Assume an API SLA breach (latency > 100ms) has happened. What are the steps to investigate, diagnose, and mitigate it?

Let's dissect it further.

Steps to Investigate, Diagnose, and Mitigate API SLA Breach (>100ms)

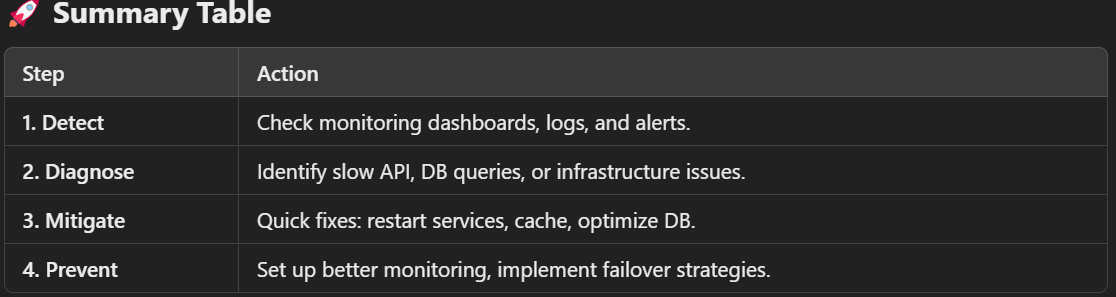

When an API SLA breach occurs (latency > 100ms), follow these steps systematically:

1️⃣ Detect & Acknowledge the SLA Breach

✅ Check Monitoring & Alerts

Dashboards (Datadog, Prometheus, New Relic, Grafana)

APM Tracing (Jaeger, OpenTelemetry)

Logs & Metrics (ELK Stack, CloudWatch)

Alerting Systems (PagerDuty, Opsgenie)

✅ Confirm the Issue

Verify if the latency spike is consistent or temporary.

Check API response times across different endpoints.

Validate if it's only a few users or system-wide.
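To make the "consistent or temporary" check concrete, here is a minimal sketch that summarizes a batch of latency samples against the 100ms SLA. The function names and the sample values are illustrative assumptions, not output from any of the monitoring tools listed above.

```python
import math

SLA_MS = 100  # SLA threshold from the text


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of latencies (ms)."""
    if not samples:
        raise ValueError("samples must be non-empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def breach_summary(samples, sla_ms=SLA_MS):
    """Report P50/P95/P99 and the share of requests slower than the SLA."""
    over = sum(1 for s in samples if s > sla_ms)
    return {
        "p50": percentile(samples, 50),
        "p95": percentile(samples, 95),
        "p99": percentile(samples, 99),
        "over_sla_ratio": over / len(samples),
    }


if __name__ == "__main__":
    # Made-up samples: mostly fast requests with a slow tail.
    latencies = [40, 45, 50, 55, 60, 48, 52, 300, 47, 500]
    print(breach_summary(latencies))
```

A healthy P50 next to a P99 far above the SLA suggests a tail problem affecting some requests; a P50 above the SLA suggests a system-wide slowdown.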

2️⃣ Diagnose the Root Cause

A. Check API Gateway & Load Balancer

Logs: Are there 502 Bad Gateway or 504 Gateway Timeout errors?

Rate Limits: Check if API limits were exceeded.

Auto-Scaling Issues: Are new instances not spawning fast enough?
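As a rough sketch of the log check, the snippet below counts 502 and 504 responses. It assumes a simplified, hypothetical log line of the form "path status latency_ms"; real gateway and load balancer logs have more fields and would need their own parser.

```python
from collections import Counter

GATEWAY_ERRORS = {502, 504}


def gateway_error_stats(log_lines):
    """Count 502/504 responses in lines shaped like '<path> <status> <latency_ms>'."""
    counts = Counter()
    total = 0
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3 or not parts[1].isdigit():
            continue  # skip lines that do not match the assumed format
        total += 1
        status = int(parts[1])
        if status in GATEWAY_ERRORS:
            counts[status] += 1
    ratio = sum(counts.values()) / total if total else 0.0
    return {"total": total, "502": counts[502], "504": counts[504], "error_ratio": ratio}


if __name__ == "__main__":
    sample = ["/pay 200 45", "/pay 504 30000", "/pay 502 12", "/auth 200 20"]
    print(gateway_error_stats(sample))
```

If the error ratio is near zero while latency is high, the gateway is probably passing traffic through normally and the delay sits further downstream.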

B. Investigate Backend Latency

Database Slow Queries?

Run:

EXPLAIN ANALYZE on slow queries.

Check DB CPU, locks, and long-running transactions.

Cache Misses?

Inspect Redis/Memcached for high miss rates.

Upstream Service Delays?

Use distributed tracing (Jaeger, Zipkin) to identify slow services.
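For the cache-miss check, Redis exposes cumulative `keyspace_hits` and `keyspace_misses` counters in the stats section of its INFO output. The sketch below computes a hit rate from those two fields; how the INFO data is fetched and turned into a dict is left out and assumed here.

```python
def cache_hit_rate(info):
    """Hit rate from a dict holding Redis INFO 'keyspace_hits' and 'keyspace_misses'."""
    hits = int(info.get("keyspace_hits", 0))
    misses = int(info.get("keyspace_misses", 0))
    lookups = hits + misses
    return hits / lookups if lookups else None


def hit_rate_between(before, after):
    """Hit rate for the interval between two INFO snapshots.

    The counters are cumulative since server start, so the difference
    between snapshots reflects recent behavior better than the lifetime ratio.
    """
    delta = {
        "keyspace_hits": int(after["keyspace_hits"]) - int(before["keyspace_hits"]),
        "keyspace_misses": int(after["keyspace_misses"]) - int(before["keyspace_misses"]),
    }
    return cache_hit_rate(delta)


if __name__ == "__main__":
    earlier = {"keyspace_hits": 9500, "keyspace_misses": 500}
    later = {"keyspace_hits": 9900, "keyspace_misses": 1100}
    print(cache_hit_rate(later), hit_rate_between(earlier, later))
```

Comparing two snapshots matters during an incident: a long-lived server can still show a healthy lifetime hit rate while the last few minutes are mostly misses.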

C. Resource Bottlenecks

CPU/Memory Spikes?

Run:

top, htop, kubectl top pods

Thread/Connection Pool Saturation?

Run:

SHOW PROCESSLIST; (MySQL)

Check for DB connection pool exhaustion.

D. Network Latency Issues

Packet Loss / High RTT?

Run:

ping, traceroute, netstat

Cloud Provider Issues?

Check AWS/GCP/Azure status pages.

3️⃣ Mitigation Strategies

A. Immediate Fixes (Quick Recovery)

Restart high-latency service pods/instances.

Scale up backend services dynamically (increase replica count).

Bypass DB for frequently used data using caching (Redis, CDN).

Kill long-running DB queries using KILL QUERY <id> (MySQL).

B. Short-Term Fixes (Within Hours)

Optimize slow queries by adding indexes.

Increase DB connection pool size.

Enable asynchronous processing for expensive operations.

C. Long-Term Fixes (Prevent Future SLA Breaches)

Implement Circuit Breakers (Resilience4J; Hystrix is in maintenance mode) to prevent cascading failures.

Introduce Read Replicas to distribute DB load.

Optimize API response payloads (use pagination, compress JSON).

Implement Auto-Scaling for peak loads.
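To show what a circuit breaker does, here is a minimal sketch of the pattern in Python. It is not the Resilience4J API; the class name, the thresholds, and the injectable clock are assumptions made for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers get an immediate error instead of waiting on a slow dependency, which keeps one slow service from tying up threads across the whole request path.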

4️⃣ Post-Mortem & Prevention

✅ Document Findings

What caused the issue?

How was it resolved?

What should be improved?

✅ Set Up Better Monitoring

Latency heatmaps to detect patterns.

Alert thresholds based on P95, P99 latencies.

✅ Simulate Failures (Chaos Engineering)

Test API under high load (k6, Locust).

Simulate DB failure, high network latency.
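A latency heatmap is essentially a grid of counts: time window on one axis, latency bucket on the other. The sketch below builds that grid from raw samples; the bucket edges and the 60-second window are hypothetical choices, and a real dashboard tool would do this aggregation for you.

```python
from collections import Counter

BUCKET_EDGES_MS = (50, 100, 250, 500)  # hypothetical bucket upper bounds


def latency_bucket(latency_ms, edges=BUCKET_EDGES_MS):
    """Label the first bucket whose upper bound covers the latency."""
    for edge in edges:
        if latency_ms <= edge:
            return f"<={edge}ms"
    return f">{edges[-1]}ms"


def heatmap_counts(samples, window_s=60):
    """Count samples per (window_start, bucket).

    samples: iterable of (unix_timestamp_seconds, latency_ms) pairs.
    """
    grid = Counter()
    for ts, latency_ms in samples:
        window_start = int(ts // window_s) * window_s
        grid[(window_start, latency_bucket(latency_ms))] += 1
    return grid


if __name__ == "__main__":
    data = [(0, 40), (10, 45), (59, 120), (60, 480), (61, 600)]
    for cell, count in sorted(heatmap_counts(data).items()):
        print(cell, count)
```

Patterns such as a slow bucket that fills up at the same minute every hour are easier to spot in this form than in a single average line.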

🚀 Real-World Example: Debugging an API SLA Breach (>100ms)

📌 Scenario:

A payment API that usually responds in 50ms is now taking 500ms+, leading to an SLA breach. Customers report slow transactions and timeouts.

1️⃣ Detection: Identifying the Issue

✅ Monitoring & Alerts Triggered

Datadog alerts: P99 latency increased to 500ms+.

Grafana dashboard: API response time spiked suddenly.

Logs (ELK Stack): Multiple slow requests seen.

✅ Checking API Gateway & Load Balancer

AWS ALB logs: No 502/504 errors.

Rate limits: API traffic within expected thresholds.

Auto-scaling events: No new instances spun up.

🛑 Conclusion: API is receiving traffic normally, but responses are slow.

2️⃣ Diagnosis: Finding the Root Cause

✅ Step 1: Check Backend Service Performance

Enable Distributed Tracing (Jaeger)

API call → Auth Service: 30ms

API call → Payment Processor: 450ms (⚠️ high latency)

CPU/Mem Usage (k8s metrics): Normal

🔍 Findings:

Payment Processor latency is the culprit!
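The same conclusion can be reached mechanically from the trace breakdown. This sketch uses the two span durations quoted above; the span labels are illustrative, and treating the total as the sum of the spans assumes the calls run one after the other.

```python
def slowest_span(spans):
    """Return (name, duration_ms, share_of_total) for the slowest span.

    spans: dict mapping span name to duration in milliseconds.
    """
    if not spans:
        raise ValueError("spans must be non-empty")
    total = sum(spans.values())
    name = max(spans, key=spans.get)
    return name, spans[name], spans[name] / total


if __name__ == "__main__":
    # Durations from the trace in this scenario.
    trace = {"auth-service": 30, "payment-processor": 450}
    name, duration_ms, share = slowest_span(trace)
    print(f"{name}: {duration_ms}ms ({share:.0%} of traced time)")
```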

✅ Step 2: Investigate Payment Service Slowdown

Database Slow Queries?

SELECT * FROM pg_stat_activity WHERE state='active';

Found a long-running transaction.

Cache Misses?

Redis cache hit rate dropped to 40% (normal: 95%+).

🔍 Findings:

The Redis cache is ineffective, causing DB overload.

A bad DB query is causing transaction locks.
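A quick back-of-the-envelope calculation shows why the hit-rate drop hurts so much. The sketch assumes every cache miss turns into exactly one DB read, which is a simplification.

```python
def db_reads(requests, hit_rate_pct):
    """DB reads caused by cache misses, assuming one read per miss.

    hit_rate_pct is an integer percentage to keep the arithmetic exact.
    """
    return requests * (100 - hit_rate_pct) // 100


if __name__ == "__main__":
    normal = db_reads(1000, 95)    # normal hit rate from the scenario
    degraded = db_reads(1000, 40)  # hit rate observed during the incident
    print(normal, degraded, degraded / normal)
```

Per 1,000 requests, the DB goes from 50 reads to 600 reads, a 12x increase in load without any change in incoming traffic.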

3️⃣ Mitigation: Fixing the SLA Breach

Immediate Fixes (Quick Recovery)

✅ Restart the Payment Processor service to clear up hanging connections.

✅ Increase Redis cache TTL to reduce DB hits.

✅ Kill long-running DB queries and optimize indexing.

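SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE state = 'active' AND now() - query_start > interval '5 minutes' AND pid <> pg_backend_pid();

(The 5-minute cutoff is an example threshold. Without a filter like this, the statement terminates every active session, including healthy ones.)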

✅ Manually reintroduce a missing Redis key to improve cache performance.

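💡 Result: Latency drops from 500ms → 120ms immediately. This is still above the 100ms SLA, so the short-term fixes below are needed to finish the recovery.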
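To illustrate why a longer TTL reduces DB hits, here is a small cache-aside sketch. It uses an in-memory dict as a stand-in for Redis, and the class and method names are hypothetical.

```python
import time


class TTLCache:
    """In-memory stand-in for a Redis cache with a per-entry TTL."""

    def __init__(self, ttl_s, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._store = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        """Return the cached value, or call loader(key) on a miss or expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: no DB access
        value = loader(key)  # cache miss: fall through to the database
        self._store[key] = (value, now + self.ttl_s)
        return value
```

Each entry triggers at most one loader call per TTL period, so a longer TTL means fewer DB reads, at the cost of serving staler data. For payment data that trade-off deserves care.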

Short-Term Fixes (Within Hours)

✅ Add missing indexes on the columns used by slow queries.

✅ Increase DB connection pool size so requests do not queue waiting for a connection.

✅ Adjust Redis eviction policy to retain important cache entries.

Long-Term Fixes (Future Prevention)

✅ Enable Circuit Breakers (Resilience4J) to prevent cascading failures.

✅ Add DB Read Replicas to offload queries.

✅ Implement Auto-Scaling for Redis instances.

✅ Set up Alerts for Cache Hit Rate drops.
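An alert on cache hit rate can be as simple as "below a threshold for several checks in a row". The sketch below shows that rule; the 0.90 threshold and the three-sample streak are hypothetical values that would need tuning against the normal 95%+ baseline.

```python
def should_alert(hit_rates, threshold=0.90, consecutive=3):
    """True if the hit rate stays below threshold for `consecutive` samples in a row."""
    streak = 0
    for rate in hit_rates:
        streak = streak + 1 if rate < threshold else 0
        if streak >= consecutive:
            return True
    return False


if __name__ == "__main__":
    print(should_alert([0.96, 0.95, 0.40, 0.41, 0.39]))  # sustained drop
    print(should_alert([0.96, 0.40, 0.95, 0.40, 0.95]))  # isolated dips
```

Requiring a streak avoids paging on a single noisy sample while still catching a sustained drop like the one in this scenario.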

4️⃣ Post-Mortem & Key Takeaways

📌 Issue: Cache miss caused excessive DB queries, leading to transaction locks.

📌 Root Cause: Expired Redis key + Unoptimized DB query.

📌 Fix: Restart, cache optimization, DB indexing, circuit breakers.

📌 Prevention: Monitoring, autoscaling, read replicas.

💡 Lessons Learned

Always have monitoring on cache hit rates.

DB indexing can prevent major slowdowns.

Circuit breakers should be in place to fail fast.