Design a system to notify users when a certain stock price changes by a given delta.

Functional Requirements (FR)

1. Users should be able to register and log in securely using their credentials.

2. Users can subscribe to one or more stocks they want to monitor.

3. For each stock, users can specify:

Delta threshold (e.g., notify me when the price changes by ±5%)

Direction (increase, decrease, or both)

4. The system continuously fetches live stock prices from reliable market data providers/APIs (such as NSE, BSE, or Yahoo Finance).

5. The system compares the latest price with the last notified price, using the user's delta threshold and direction.

6. The system generates alerts when trigger conditions are met.
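The comparison in requirements 3 to 6 can be sketched as a small pure function. This is a minimal illustration, not a definitive implementation: the function name `should_notify` and its parameter names are assumptions, and the baseline is taken to be the last notified price.

```python
from decimal import Decimal


def should_notify(last_notified: Decimal, latest: Decimal, delta_type: str,
                  delta_value: Decimal, direction: str) -> bool:
    """Return True when the move from last_notified to latest meets the threshold."""
    change = latest - last_notified
    if delta_type == "percentage":
        if last_notified == 0:
            return False  # percentage change is undefined against a zero baseline
        magnitude = abs(change) / last_notified * 100
    elif delta_type == "absolute":
        magnitude = abs(change)
    else:
        raise ValueError(f"unknown delta_type: {delta_type}")

    if magnitude < delta_value:
        return False
    if direction == "both":
        return change != 0
    if direction == "increase":
        return change > 0
    if direction == "decrease":
        return change < 0
    raise ValueError(f"unknown direction: {direction}")


# A move from 3900 to 4100 is about 5.13%, so a ±5% alert fires.
print(should_notify(Decimal("3900"), Decimal("4100"), "percentage", Decimal("5"), "both"))
```

Using `Decimal` rather than floats avoids rounding surprises right at the threshold boundary.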

Non-Functional Requirements (NFR)

1. The system should be highly scalable, supporting millions of users monitoring tens of thousands of stocks concurrently.

2. Stock price updates should be processed and alerts triggered within 2–3 seconds of receiving the data.

3. The system should be highly available; services should be fault-tolerant, with automatic failover and redundancy (multi-region or active-active setup).

4. Each event should be delivered at least once (with deduplication on the consumer side).

5. The system should maintain eventual consistency between stock data and notifications.

Estimates

1) Assumptions (base scenario)

Total registered users: 10,000,000

Active users during market hours: 10% → 1,000,000 active users

Average subscriptions (stocks) per active user: 5 → 5,000,000 subscriptions total

Distinct stocks monitored: 10,000 symbols

Average incoming price update rate per stock: 1 update / sec → 10,000 updates/sec

Average price update message size (symbol, price, ts, meta): 300 bytes

Average subscribers per stock = subscriptions / distinct stocks = 5,000,000 / 10,000 = 500 subscribers/stock

2) Ingestion (raw market data)

Updates/sec = distinct stocks * updates/sec/stock =

10,000 * 1 = 10,000 updates/sec

Bytes/sec = updates/sec * message_size =

10,000 * 300 = 3,000,000 bytes/sec

Bytes/hour = bytes/sec * 3600 =

3,000,000 * 3600 = 10,800,000,000 bytes/hour

Bytes/day = bytes/hour * 24 =

10,800,000,000 * 24 = 259,200,000,000 bytes/day = 259.2 GB/day (decimal GB)
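The arithmetic above can be reproduced with a short script, which makes it easy to re-run with different assumptions. The constant names are illustrative. The threshold-check rate at the end is a derived worst-case figure that the estimates do not state explicitly: it assumes every update is checked against every subscriber of that stock.

```python
# Inputs taken from the assumptions section.
DISTINCT_STOCKS = 10_000
UPDATES_PER_STOCK_PER_SEC = 1
MESSAGE_BYTES = 300
TOTAL_SUBSCRIPTIONS = 5_000_000
REPLICATION_FACTOR = 3  # Kafka replication factor for 24-hour retention

updates_per_sec = DISTINCT_STOCKS * UPDATES_PER_STOCK_PER_SEC
bytes_per_sec = updates_per_sec * MESSAGE_BYTES
bytes_per_day = bytes_per_sec * 3600 * 24
retained_bytes = bytes_per_day * REPLICATION_FACTOR

# Derived worst case: each update evaluated against each subscriber of the stock.
subscribers_per_stock = TOTAL_SUBSCRIPTIONS // DISTINCT_STOCKS
checks_per_sec = updates_per_sec * subscribers_per_stock

print(f"updates/sec:      {updates_per_sec:,}")
print(f"ingest:           {bytes_per_sec / 1e6:.1f} MB/s")
print(f"raw data per day: {bytes_per_day / 1e9:.1f} GB")
print(f"retained (x{REPLICATION_FACTOR}):    {retained_bytes / 1e9:.1f} GB")
print(f"threshold checks: {checks_per_sec:,} per second")
```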

So: ~259 GB/day of raw update data. If you retain 24 hours of Kafka logs with replication factor 3: storage ≈ 259.2 * 3 = 777.6 GB.

APIs

1. User Management Service

Handles user registration, authentication, and profile updates.

POST /api/v1/users/register

Registers a new user.

Request:

{
  "name": "Shashank",
  "email": "shashank@example.com",
  "password": "StrongPassword123"
}

Response:

{
  "userId": "u12345",
  "message": "User registered successfully"
}

POST /api/v1/users/login

Logs in a user and returns an auth token.

Request:

{
  "email": "shashank@example.com",
  "password": "StrongPassword123"
}

Response:

{
  "token": "jwt-token",
  "expiresIn": 3600
}

GET /api/v1/users/profile

Fetch user profile details.

Headers: Authorization: Bearer <jwt-token>

Response:

{
  "userId": "u12345",
  "name": "Shashank",
  "email": "shashank@example.com",
  "notificationPreferences": ["email", "push"]
}

2. Stock Watchlist Service

Handles adding, updating, and removing stock subscriptions.

POST /api/v1/watchlist

Add a stock to user’s watchlist with delta threshold.

Request:

{
  "stockSymbol": "TCS",
  "deltaType": "percentage",        // or "absolute"
  "deltaValue": 5,                  // 5% change
  "direction": "both",              // "increase" | "decrease" | "both"
  "notificationChannel": ["email"]
}

Response:

{
  "subscriptionId": "sub567",
  "message": "Stock added to watchlist"
}
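Before a subscription is stored, the request body needs to be validated against the allowed values. The following is a minimal sketch of that step; `WatchlistRequest` and `parse_watchlist_request` are hypothetical names, the `"sms"` channel is taken from the notification preferences endpoint, and defaulting `direction` to `"both"` is an assumption.

```python
from dataclasses import dataclass

ALLOWED_DELTA_TYPES = {"percentage", "absolute"}
ALLOWED_DIRECTIONS = {"increase", "decrease", "both"}
ALLOWED_CHANNELS = {"email", "push", "sms"}


@dataclass(frozen=True)
class WatchlistRequest:
    stock_symbol: str
    delta_type: str
    delta_value: float
    direction: str
    notification_channel: tuple


def parse_watchlist_request(body: dict) -> WatchlistRequest:
    """Validate a POST /api/v1/watchlist body; raise ValueError listing every problem."""
    errors = []
    symbol = str(body.get("stockSymbol", "")).strip().upper()
    if not symbol:
        errors.append("stockSymbol is required")
    delta_type = body.get("deltaType")
    if delta_type not in ALLOWED_DELTA_TYPES:
        errors.append("deltaType must be 'percentage' or 'absolute'")
    delta_value = body.get("deltaValue")
    is_number = isinstance(delta_value, (int, float)) and not isinstance(delta_value, bool)
    if not is_number or delta_value <= 0:
        errors.append("deltaValue must be a positive number")
    direction = body.get("direction", "both")  # assumed default
    if direction not in ALLOWED_DIRECTIONS:
        errors.append("direction must be 'increase', 'decrease', or 'both'")
    channels = body.get("notificationChannel") or []
    if not isinstance(channels, list) or not channels or not set(channels) <= ALLOWED_CHANNELS:
        errors.append("notificationChannel must be a non-empty list of email/push/sms")
    if errors:
        raise ValueError("; ".join(errors))
    return WatchlistRequest(symbol, delta_type, float(delta_value), direction, tuple(channels))
```

Collecting all errors before raising lets the API return one response that lists every invalid field.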

GET /api/v1/watchlist

Fetch all stocks the user is tracking.

Response:

[
  {
    "subscriptionId": "sub567",
    "stockSymbol": "TCS",
    "deltaType": "percentage",
    "deltaValue": 5,
    "direction": "both",
    "lastNotifiedPrice": 3950.5,
    "notificationChannel": ["email"]
  }
]

PUT /api/v1/watchlist/{subscriptionId}

Update delta threshold or notification preferences.

Request:

{
  "deltaValue": 10,
  "direction": "increase",
  "notificationChannel": ["push", "email"]
}

Response:

{
  "message": "Subscription updated successfully"
}

DELETE /api/v1/watchlist/{subscriptionId}

Remove a stock from watchlist.

Response:

{
  "message": "Subscription removed"
}

3. Stock Price Service

Handles fetching real-time or last known stock prices.

GET /api/v1/stocks/{symbol}

Get the latest price of a given stock.

Response:

{
  "symbol": "TCS",
  "currentPrice": 3950.5,
  "lastUpdated": "2025-10-28T10:00:12Z"
}

GET /api/v1/stocks/trending

(Optional) Get top N stocks with highest percentage change for dashboards.

Response:

[
  { "symbol": "RELIANCE", "changePercent": 3.2 },
  { "symbol": "INFY", "changePercent": 2.9 }
]

4. Notification Service

Handles viewing and managing user notifications.

GET /api/v1/notifications

Fetch recent notifications for the user.

Response:

[
  {
    "notificationId": "n789",
    "stockSymbol": "TCS",
    "oldPrice": 3900.0,
    "newPrice": 4100.0,
    "changePercent": 5.13,
    "direction": "increase",
    "sentAt": "2025-10-28T10:02:11Z",
    "deliveryStatus": "delivered"
  }
]

PATCH /api/v1/notifications/preferences

Update preferred notification channels (email, push, SMS).

Request:

{
  "notificationChannels": ["email", "push"]
}

Response:

{
  "message": "Notification preferences updated"
}

Databases

| Database | Purpose | Type |

| ---------------------------------------- | --------------------------------------------- | ----------------- |

| **UserDB (MySQL / PostgreSQL)** | Store user profiles and authentication info | Relational |

| **WatchlistDB (MySQL / PostgreSQL)** | Store user stock subscriptions and thresholds | Relational |

| **StockPriceStore (Redis / Cassandra)** | Store latest stock prices for quick lookup | In-memory / NoSQL |

| **NotificationDB (MongoDB / Cassandra)** | Store user notification logs and status | NoSQL |

| **Stream System (Kafka / Pulsar)** | Handle stock price updates & event streams | Distributed Log |

📘 1. UserDB (MySQL / PostgreSQL)

Table: users

user_id BIGINT PRIMARY KEY AUTO_INCREMENT

name VARCHAR(100)

email VARCHAR(100) UNIQUE

password_hash VARCHAR(255)

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

updated_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP

Table: user_preferences

user_id BIGINT REFERENCES users(user_id)

notification_channels JSON -- e.g. ["email", "push"]

timezone VARCHAR(50)

last_login_at TIMESTAMP

PRIMARY KEY (user_id)
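The `password_hash` column implies that passwords are stored only as salted hashes. A minimal sketch using PBKDF2 from Python's standard library is shown below; the storage format, the iteration count, and the function names are assumptions for illustration, and a production system might prefer a dedicated library such as bcrypt or Argon2.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune to your hardware


def hash_password(password: str) -> str:
    """Return 'scheme$iterations$salt$digest', which fits in VARCHAR(255)."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"pbkdf2_sha256${ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    _scheme, iterations, salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(candidate.hex(), digest_hex)
```

Storing the iteration count alongside the hash allows the work factor to be raised later without invalidating existing rows.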

📗 2. WatchlistDB (MySQL / PostgreSQL)

Table: stock_watchlist

subscription_id BIGINT PRIMARY KEY AUTO_INCREMENT

user_id BIGINT REFERENCES users(user_id)

stock_symbol VARCHAR(20) -- e.g. "TCS", "RELIANCE"

delta_type ENUM('absolute','percentage')

delta_value FLOAT

direction ENUM('increase','decrease','both')

last_notified_price DECIMAL(12,2)

notification_channels JSON

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

updated_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP

INDEX idx_user_stock (user_id, stock_symbol)

INDEX idx_stock_symbol (stock_symbol)
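The `idx_stock_symbol` index exists so that, when a price update arrives, all subscriptions for that symbol can be fetched without scanning the table. The sketch below demonstrates that lookup using SQLite from the Python standard library as a stand-in for MySQL/PostgreSQL; column types are simplified, the ENUMs become CHECK constraints, and the sample rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE stock_watchlist (
    subscription_id     INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id             INTEGER NOT NULL,
    stock_symbol        TEXT NOT NULL,
    delta_type          TEXT NOT NULL CHECK (delta_type IN ('absolute', 'percentage')),
    delta_value         REAL NOT NULL,
    direction           TEXT NOT NULL CHECK (direction IN ('increase', 'decrease', 'both')),
    last_notified_price REAL
);
CREATE INDEX idx_user_stock ON stock_watchlist (user_id, stock_symbol);
CREATE INDEX idx_stock_symbol ON stock_watchlist (stock_symbol);
""")

# Illustrative sample data only.
conn.executemany(
    "INSERT INTO stock_watchlist "
    "(user_id, stock_symbol, delta_type, delta_value, direction, last_notified_price) "
    "VALUES (?, ?, ?, ?, ?, ?)",
    [
        (101, "TCS", "percentage", 5.0, "both", 3900.0),
        (102, "TCS", "absolute", 50.0, "increase", 3950.5),
        (101, "INFY", "percentage", 3.0, "decrease", 1500.0),
    ],
)


def subscriptions_for(symbol: str) -> list:
    """Fetch every subscription for one symbol (the per-update fan-out query)."""
    cursor = conn.execute(
        "SELECT subscription_id, user_id, delta_type, delta_value, direction, "
        "last_notified_price FROM stock_watchlist "
        "WHERE stock_symbol = ? ORDER BY subscription_id",
        (symbol,),
    )
    return cursor.fetchall()


for row in subscriptions_for("TCS"):
    print(row)
```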

📙 3. StockPriceStore (Redis / Cassandra)

Redis Key Schema

Key: stock:<symbol>

Value:

{
  "symbol": "TCS",
  "current_price": 3950.5,
  "last_updated": "2025-10-28T10:00:12Z"
}

Cassandra Table (if used)

CREATE TABLE stock_prices (

stock_symbol TEXT PRIMARY KEY,

current_price DECIMAL,

last_updated TIMESTAMP

);

📒 4. NotificationDB (MongoDB / Cassandra)

Collection / Table: notifications

notification_id UUID PRIMARY KEY

user_id BIGINT

stock_symbol VARCHAR(20)

old_price DECIMAL(12,2)

new_price DECIMAL(12,2)

change_percent FLOAT

direction VARCHAR(10) -- increase / decrease

sent_at TIMESTAMP

delivery_status VARCHAR(20) -- delivered / failed / pending

channel VARCHAR(20) -- email / push / sms

📕 5. Stream System (Kafka / Pulsar)

Topic: stock-price-updates

{
  "symbol": "TCS",
  "price": 3950.5,
  "timestamp": "2025-10-28T10:00:12Z"
}

Topic: triggered alerts (Notification Queue)

{
  "userId": "u12345",
  "stockSymbol": "TCS",
  "oldPrice": 3900.0,
  "newPrice": 4100.0,
  "changePercent": 5.13,
  "direction": "increase",
  "notificationChannel": "email"
}

✅ Summary

Relational DBs → store users & subscriptions

Redis/Cassandra → real-time stock prices

Kafka → event streaming between ingestion & notification workers

MongoDB/Cassandra → durable notification history

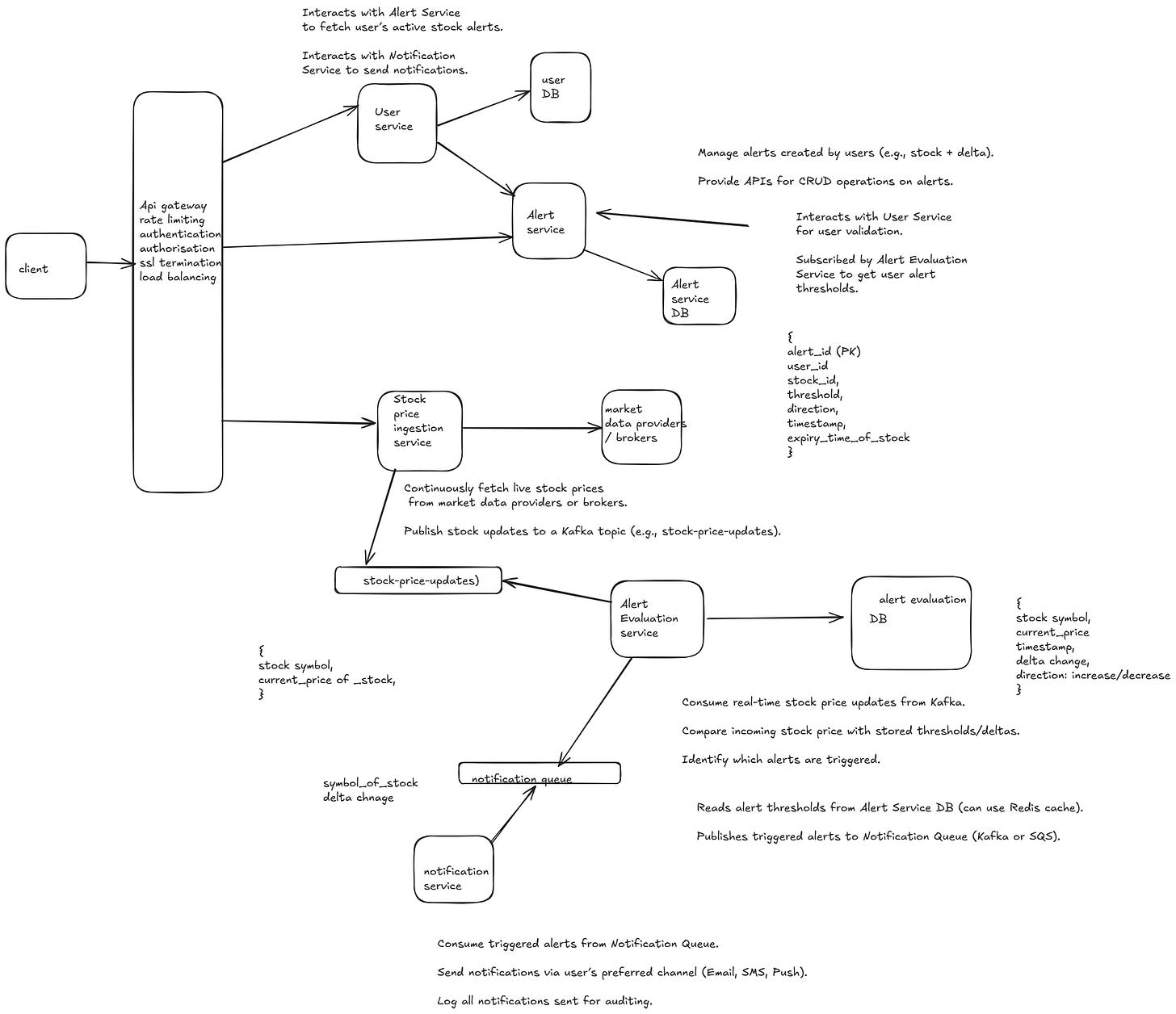

🧩 Microservices and Their Interaction

1. User Service

Responsibilities:

Manage user profiles and authentication.

Store user preferences like notification channels (Email, SMS, Push).

Interactions:

Interacts with Alert Service to fetch user’s active stock alerts.

Interacts with Notification Service to send notifications.

2. Stock Price Ingestion Service

Responsibilities:

Continuously fetch live stock prices from market data providers or brokers.

Publish stock updates to a Kafka topic (e.g., stock-price-updates).

Interactions:

Sends price change events to Alert Evaluation Service via Kafka.

3. Alert Service

Responsibilities:

Manage alerts created by users (e.g., stock + delta).

Provide APIs for CRUD operations on alerts.

Interactions:

Interacts with User Service for user validation.

Read by the Alert Evaluation Service, which needs each user's alert thresholds.

4. Alert Evaluation Service

Responsibilities:

Consume real-time stock price updates from Kafka.

Compare incoming stock price with stored thresholds/deltas.

Identify which alerts are triggered.

Interactions:

Reads alert thresholds from Alert Service DB (can use Redis cache).

Publishes triggered alerts to Notification Queue (Kafka or SQS).
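The core loop of the Alert Evaluation Service can be sketched in memory as follows. This is an illustrative simplification: alerts are indexed by symbol in a dictionary instead of a Redis cache, only percentage thresholds in both directions are handled, and the returned list stands in for publishing to the Notification Queue. The class and field names are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: int
    user_id: int
    symbol: str
    delta_percent: float
    last_notified_price: float


class AlertEvaluator:
    def __init__(self, alerts):
        # Index alerts by symbol so one price update touches only its subscribers.
        self._by_symbol = defaultdict(list)
        for alert in alerts:
            self._by_symbol[alert.symbol].append(alert)

    def on_price(self, symbol: str, price: float, timestamp: str) -> list:
        """Return the triggered-alert events for a single price update."""
        triggered = []
        for alert in self._by_symbol.get(symbol, []):
            baseline = alert.last_notified_price
            if baseline <= 0:
                continue  # no usable baseline for a percentage change
            change_percent = (price - baseline) / baseline * 100
            if abs(change_percent) >= alert.delta_percent:
                triggered.append({
                    "userId": alert.user_id,
                    "alertId": alert.alert_id,
                    "stockSymbol": symbol,
                    "oldPrice": baseline,
                    "newPrice": price,
                    "changePercent": round(change_percent, 2),
                    "direction": "increase" if change_percent > 0 else "decrease",
                    "triggerTime": timestamp,
                })
                # Reset the baseline so the next alert measures from this price.
                alert.last_notified_price = price
        return triggered
```

Resetting the baseline after each trigger is what keeps a stock hovering around the threshold from firing an alert on every tick.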

5. Notification Service

Responsibilities:

Consume triggered alerts from Notification Queue.

Send notifications via user’s preferred channel (Email, SMS, Push).

Log all notifications sent for auditing.

Interactions:

Interacts with User Service for contact info.

Interacts with Notification Gateway or 3rd party APIs (Twilio, SES, FCM).

6. Notification Gateway (Optional Layer)

Responsibilities:

Unified interface for sending out different types of notifications.

Handles retries, rate limiting, and failures gracefully.

Interactions:

Called by Notification Service for actual message delivery.
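The retry behaviour of the gateway can be sketched as exponential backoff with jitter. This is a minimal illustration under stated assumptions: `send` is any callable that raises on failure (a hypothetical wrapper around an email, SMS, or push provider), and the attempt count and delays are example values. Catching every `Exception` is a simplification; real code would retry only on transient errors.

```python
import random
import time


def send_with_retry(send, message, max_attempts=4, base_delay=0.5,
                    max_delay=8.0, sleep=time.sleep):
    """Call send(message), retrying failures with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(message)
        except Exception:
            if attempt == max_attempts:
                raise  # retries exhausted: surface the error to the caller
            # Delay cap doubles each attempt: 0.5s, 1s, 2s, ... up to max_delay.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter spreads retries so failing senders do not synchronize.
            sleep(random.uniform(0, cap))
```

The `sleep` parameter is injectable so the function can be tested without waiting.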

7. Analytics & Monitoring Service

Responsibilities:

Collect metrics on alert triggers, delivery success, system latency.

Provide dashboards for operational visibility.

Interactions:

Subscribes to logs/streams from other services (e.g., Prometheus, ELK).

🧭 Interaction Flow Summary

User creates an alert → User Service → Alert Service stores it.

Stock Price Ingestion Service receives live data → publishes to Kafka.

Alert Evaluation Service consumes events → checks deltas → pushes triggered alerts to Notification Queue.

Notification Service consumes queue → fetches user info → sends via Notification Gateway.

Analytics Service monitors system metrics and alert delivery rates.

HLD

🧩 Problem Context

In a stock price alert system, a user may get multiple notifications for the same trigger due to:

Duplicate events from Kafka / message queue (at-least-once delivery).

Service retries after a timeout.

Network or system failures causing partial processing.

To prevent this, we must ensure idempotency — i.e., sending the same notification once and only once, even if the same event is processed multiple times.

✅ Ways to Ensure Idempotency

1. Use a Unique Idempotency Key

Generate a unique key per alert-triggered event.

Store it before sending notification.

If the same key is seen again → skip re-sending.

Example Idempotency Key Format:

idempotency_key = user_id + ":" + stock_symbol + ":" + alert_id + ":" + price_change_timestamp

Workflow:

When an alert triggers, create this key.

Before sending notification, check in Redis or DB if this key exists.

If not → send notification and store the key with a TTL (say, 1 hour).

If yes → ignore duplicate.

Storage Example (Redis):

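SET idempotency_key "sent" NX EX 3600

(A single SET with NX and EX claims the key and sets the TTL atomically. A separate SETNX followed by EXPIRE can leave a key without a TTL if the process fails between the two commands.)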
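The idempotency-key workflow can be sketched without a running Redis by using an in-memory store whose `claim` method mimics an atomic set-if-absent with a TTL. Everything here is illustrative: `IdempotencyStore`, `make_key`, and `notify_once` are hypothetical names, and a dictionary in one process only stands in for a store shared across workers.

```python
import time


class IdempotencyStore:
    """In-memory stand-in for an atomic 'set if absent, with TTL' operation."""

    def __init__(self, clock=time.monotonic):
        self._expiry = {}
        self._clock = clock

    def claim(self, key: str, ttl_seconds: float) -> bool:
        """Return True if the key was free (or expired) and is now claimed."""
        now = self._clock()
        expires_at = self._expiry.get(key)
        if expires_at is not None and expires_at > now:
            return False
        self._expiry[key] = now + ttl_seconds
        return True

    def release(self, key: str) -> None:
        self._expiry.pop(key, None)


def make_key(user_id, stock_symbol, alert_id, price_change_timestamp) -> str:
    return f"{user_id}:{stock_symbol}:{alert_id}:{price_change_timestamp}"


def notify_once(store: IdempotencyStore, key: str, send) -> bool:
    """Send at most once per key within the TTL; return True if sent."""
    if not store.claim(key, ttl_seconds=3600):
        return False  # duplicate event: skip
    try:
        send()
    except Exception:
        store.release(key)  # let a later retry attempt the send again
        raise
    return True
```

Claiming the key before sending closes the window in which two workers could both see the key as missing and both send.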

2. Message Deduplication at Queue Level

If you’re using a queue like Kafka or SQS, use deduplication features:

AWS SQS FIFO queues support MessageDeduplicationId: a message sent again with the same deduplication ID within the 5-minute deduplication interval is accepted but not delivered a second time.

Kafka → implement deduplication in the consumer by tracking last processed offsets + message IDs.

3. Maintain Notification Logs (Persistent Store)

Store all successfully sent notifications in a DB table.

Use a composite primary key (user_id, alert_id, trigger_time) to enforce uniqueness.

If a duplicate event tries to insert → it fails silently.

Example Table:

CREATE TABLE sent_notifications (

user_id BIGINT,

alert_id BIGINT,

trigger_time TIMESTAMP,

PRIMARY KEY (user_id, alert_id, trigger_time)

);

Then, before sending:

INSERT INTO sent_notifications (...)

ON CONFLICT DO NOTHING;
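The insert-then-check pattern can be tried locally with SQLite from the Python standard library, used here as a stand-in for PostgreSQL. `INSERT OR IGNORE` is SQLite's equivalent of `ON CONFLICT DO NOTHING` for this purpose. The helper name `record_if_new` is an assumption, and `trigger_time` is stored as text for simplicity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE sent_notifications (
    user_id      INTEGER,
    alert_id     INTEGER,
    trigger_time TEXT,
    PRIMARY KEY (user_id, alert_id, trigger_time)
)
""")


def record_if_new(user_id: int, alert_id: int, trigger_time: str) -> bool:
    """Insert the row; return True only if it did not already exist."""
    cursor = conn.execute(
        "INSERT OR IGNORE INTO sent_notifications (user_id, alert_id, trigger_time) "
        "VALUES (?, ?, ?)",
        (user_id, alert_id, trigger_time),
    )
    conn.commit()
    # rowcount is 1 for a fresh insert and 0 when the primary key already existed.
    return cursor.rowcount == 1


if record_if_new(101, 555, "2025-10-28T17:20:00Z"):
    print("first delivery: send the notification")
if not record_if_new(101, 555, "2025-10-28T17:20:00Z"):
    print("duplicate event: skip")
```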

4. Use Exactly-Once Processing in Stream Processor

If you use tools like Apache Flink or Kafka Streams, enable:

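Checkpointing + State Store → ensures each message's effect on processor state (and on Kafka output topics) is applied exactly once, even on retries.

But note: exactly-once semantics can add latency overhead, and they do not cover external side effects such as sending an email or SMS, so the notification step still needs an idempotency key.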

5. Event Versioning

When an alert fires multiple times for the same user & stock, assign an incrementing event version or sequence number.

If a consumer sees an older version → it ignores it.

Example:

user_id=101, stock=GOOG, alert_id=555, version=3

Consumer ensures it always processes only the latest version.
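A version check on the consumer side can be sketched as a small gate that remembers the highest version seen per (user, alert) pair. The class name `VersionGate` is hypothetical, and the in-memory dictionary stands in for whatever durable store the consumer would actually use.

```python
class VersionGate:
    """Accept an event only if its version is newer than any seen so far."""

    def __init__(self):
        self._latest = {}  # (user_id, alert_id) -> highest version processed

    def accept(self, user_id: int, alert_id: int, version: int) -> bool:
        key = (user_id, alert_id)
        if version <= self._latest.get(key, 0):
            return False  # stale or duplicate version: ignore
        self._latest[key] = version
        return True


gate = VersionGate()
print(gate.accept(101, 555, 3))  # True: first time version 3 is seen
print(gate.accept(101, 555, 2))  # False: older than the latest processed version
```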

⚙️ Typical Production Approach (Combined)

✅ Kafka → produces at-least-once messages

✅ Redis → idempotency key store

✅ Postgres → final notification log

✅ Retry logic with exponential backoff

✅ Observability to track duplicates

End-to-End Example

AlertEvaluationService detects a delta hit for stock GOOG.

It publishes an event →

{
  "user_id": 101,
  "alert_id": 555,
  "stock": "GOOG",
  "price": 2820,
  "trigger_time": "2025-10-28T17:20:00Z",
  "event_id": "101-GOOG-555-20251028172000"
}

NotificationService consumes the event:

Checks Redis for event_id.

If missing → sends email/SMS → stores event_id in Redis & DB.

If present → skips sending.
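The `event_id` in the example event appears to be derived from the other fields, which makes it deterministic: a replayed event produces the same ID and is therefore recognised as a duplicate. A minimal sketch of that derivation follows; the function name `build_event_id` is an assumption, and the format is inferred from the single example.

```python
from datetime import datetime, timezone


def build_event_id(user_id: int, stock: str, alert_id: int, trigger_time: str) -> str:
    """Build '<user>-<stock>-<alert>-<YYYYMMDDHHMMSS>' from an ISO-8601 UTC timestamp."""
    parsed = datetime.strptime(trigger_time, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return f"{user_id}-{stock}-{alert_id}-{parsed:%Y%m%d%H%M%S}"


event = {
    "user_id": 101,
    "alert_id": 555,
    "stock": "GOOG",
    "trigger_time": "2025-10-28T17:20:00Z",
}
event_id = build_event_id(event["user_id"], event["stock"], event["alert_id"], event["trigger_time"])
print(event_id)  # 101-GOOG-555-20251028172000
```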

🏦 How Brokers Get Notified of Stock Price Changes

1. Primary Source — Stock Exchange Data Feed

Stock exchanges (like NSE, BSE, NASDAQ, NYSE, etc.) publish real-time market data feeds to registered brokers and data vendors.

There are typically two kinds of feeds:

Level 1 (Top of Book) — includes latest bid/ask and last traded price.

Level 2 (Depth of Book) — includes complete order book depth (multiple levels of bids/asks).

These feeds are extremely high-frequency (thousands of updates per second).

⚡ How Exchanges Push Updates to Brokers

Brokers receive price updates using low-latency, high-throughput channels, usually one of the following:

1. UDP Multicast Feeds

Most exchanges use multicast over dedicated leased lines for professional brokers.

Data is sent over private network connections, not over the public internet.

UDP multicast is chosen because:

It allows one-to-many broadcasting (one stream, many listeners).

Very low latency.

Scales well to thousands of subscribers.

Example:

NSE provides broadcast data feeds over leased lines using multicast.

NASDAQ TotalView-ITCH also uses UDP multicast with recovery channels.

2. FIX Protocol (Financial Information eXchange)

A standard protocol used by brokers and exchanges for order execution, trade confirmations, and sometimes price updates.

FIX can work over TCP connections.

Used more for trading messages, not always for high-speed market data.

3. WebSocket / REST APIs

Used for retail brokers or fintech platforms (like Zerodha, Groww, Robinhood, etc.).

Brokers (who already receive data from exchanges via multicast or FIX) re-publish updates to their clients via:

WebSockets → for live streaming prices to client dashboards/apps.

REST APIs → for on-demand queries (historical data, snapshots).

Example:

Zerodha Kite API provides WebSocket for tick-level updates.

Alpaca, Interactive Brokers, Robinhood all offer WebSocket APIs.

🔁 Simplified Data Flow

[Stock Exchange]

↓ (UDP Multicast / FIX)

[Brokers / Market Data Vendors]

↓ (WebSockets / APIs)

[Retail Clients / Trading Apps / Alert Systems]

🧠 Why Not Direct WebSocket from Exchange?

Because:

Exchanges handle massive data rates (millions of updates/sec).

They can’t open individual WebSocket connections for each broker — that’s not scalable.

Hence, they provide a single multicast stream → brokers handle fan-out and resell via their own APIs.