Design control plane for a distributed database

Designing a Control Plane for a Distributed Database involves defining the orchestration and management layer that governs the behavior and configuration of the data nodes .

The control plane is not directly involved in data reads/writes, but it ensures that the underlying system remains reliable, consistent, available, and efficient.

FR

1. Cluster Management

Add/remove nodes dynamically (scale out/in).

Monitor node health and availability.

Node bootstrap and decommissioning logic.

Load balancing strategy updates.

2. Configuration Management

Propagate cluster-wide configuration changes (e.g., replication factor, sharding rules).

Version control of configuration with rollback support.

Zero-downtime configuration rollout.

3. Topology Management

Maintain consistent metadata of the cluster topology (shards, partitions, replicas).

Coordinate re-sharding and data rebalancing across nodes.

Maintain partition placement rules (e.g., anti-affinity).

4. Schema Management

Manage schema versioning and propagation across nodes.

Ensure schema compatibility before applying updates.

Allow for online schema evolution (e.g., adding columns, modifying indexes).

5. Security and Access Control

Manage authentication and authorization policies.

Coordinate certificate/key rotation for encrypted communication.

Define and enforce role-based access control (RBAC).

6. Backup and Restore Orchestration

Schedule and coordinate backups (full and incremental).

Maintain metadata for backup status and recovery points.

Support point-in-time recovery coordination.

7. Failure Detection and Recovery Coordination

Monitor node, partition, or replica failures.

Decide failover strategies (e.g., promote a follower to leader).

Initiate repair or recovery workflows.

8. Consensus and Coordination

Manage distributed consensus (e.g., Raft/Paxos) for leader election, configuration agreement.

Maintain metadata consistency using distributed coordination services (like etcd/Consul/ZooKeeper).

9. Telemetry and Metrics Collection

Aggregate and expose system-wide health metrics.

Alert on SLA breaches or abnormal behavior.

Log important control plane events and actions.

10. Upgrade Orchestration

Plan and coordinate rolling upgrades for nodes (binaries/config/schema).

Ensure version compatibility during transitions.

Gracefully handle downgrades in case of issues.

11. Data Placement Strategy Management

Support manual/automatic data placement based on locality, load, or fault zones.

Interface to update placement constraints and policies.

12. API for Admin Tools

Provide control APIs (e.g., REST/gRPC) for DevOps or UI dashboard.

Secure and rate-limit access to control plane APIs.

Optional (Advanced) Functionalities

Multi-Tenancy Control: Isolation and quotas for tenant-specific resources.

Policy Enforcement Engine: Define policies (e.g., geo-replication rules, TTLs) and enforce them across the cluster.

Cost-Based Optimization Tuning: Interface to provide feedback from query planner and tune cost models.

NFR

🔐 1. Security

Authentication & Authorization: Only trusted users and components should have access to the control plane APIs and interfaces.

Encryption: All communication (intra-cluster and external) should be TLS-encrypted.

Audit Logging: All actions (like schema changes, node additions) should be logged securely.

⚙️ 2. Reliability

High Availability: The control plane itself must be highly available (e.g., deployed as a replicated service with leader election).

Fault Tolerance: It should tolerate node failures without losing metadata or orchestration state.

Self-Healing: Detect and recover from partial failures (e.g., re-elect a leader, re-apply configs).

📈 3. Scalability

Should handle large-scale deployments (e.g., 10k+ nodes, 100k+ partitions).

Control logic (e.g., placement, rebalancing) must work efficiently at scale.

Horizontal scalability for control plane components if needed (e.g., microservices).

🕒 4. Consistency

Strong Consistency for Metadata: Changes to topology, configuration, and schema should be consistently reflected across all nodes.

Should rely on distributed coordination protocols (e.g., Raft, Paxos) to maintain global consistency.

🚀 5. Performance

Fast propagation of control changes (e.g., config updates, failover decisions).

Latency of control decisions (e.g., <100ms to react to failure).

Non-blocking control plane operations; they shouldn't degrade data plane performance.

🛠️ 6. Manageability

Easy to operate (with CLI, UI, or API).

Well-documented metrics and logs for debugging.

Support for dry-run mode before applying changes.

📊 7. Observability

Monitoring: Expose metrics (CPU, memory, number of nodes managed, etc.).

Logging: Centralized logs for all orchestration activities.

Tracing: Trace propagation of a config or schema change across the cluster.

🔄 8. Upgradability

Zero-downtime rolling upgrades.

Backward compatibility with older versions of data plane nodes.

Versioned APIs and state schema for safe migrations.

📦 9. Extensibility

Plug-in architecture for custom policies (e.g., custom placement strategy).

Support for external integrations (e.g., monitoring systems, cloud-native tools).

🌍 10. Geo-Distribution Awareness

Should support multi-region, multi-zone deployments.

Handle WAN latency, region-aware failovers, and zone-aware placement decisions.

Estimates

📏 Back-of-the-Envelope Estimation

Let's say you're planning for:

10,000 nodes

1 million partitions/shards

100,000 schema versions

1,000 backup schedules

1 year of audit logs

1 admin API QPS per cluster

Control Plane Traffic

Heartbeats:

10,000 nodes × 1 heartbeat every 5s = 2,000 requests/sec

Size per heartbeat: ~512B → ~1 MB/sec sustained read

Schema/config updates:

Rare but large fan-out (push to 10k nodes)

Config push: ~5 KB × 10k = 50 MB burst load

Orchestration Events:

Few per second, but CPU intensive

e.g., failover or shard rebalancing per 1000 nodes → assume 1-2/sec maxAPI’s

📘 1. Cluster and Node Management APIs

🔹 Register a New Node

POST /api/v1/nodes/register

request {

"node_id": "node-123",

"host": "10.0.1.12",

"zone": "us-east-1a",

"instance_type": "db.medium"

}

🔹 Get Cluster Topology

GET /api/v1/cluster/topology

🔹 Decommission a Node

POST /api/v1/nodes/decommission

{

"node_id": "node-123"

}

⚙️ 2. Configuration Management APIs

🔹 Update Global Config

PUT /api/v1/config/global

request {

"replication_factor": 3,

"consistency_level": "QUORUM"

}

🔹 Get Current Configuration

GET /api/v1/config/global

🧩 3. Schema Management APIs

🔹 Create or Update Schema

POST /api/v1/schema

{

"keyspace": "orders",

"table": "transactions",

"ddl": "CREATE TABLE IF NOT EXISTS transactions (id UUID PRIMARY KEY, amount DOUBLE, ts TIMESTAMP);"

}

🔹 Get Schema Version

GET /api/v1/schema/version?keyspace=orders&table=transactions

🔹 Rollback Schema

POST /api/v1/schema/rollback

request {

"keyspace": "orders",

"table": "transactions",

"version": "v12"

}

🔄 4. Orchestration APIs

🔹 Trigger Rebalance

POST /api/v1/orchestration/rebalance

Databases

🧩 1. Types of Databases to Use

Different components of the control plane have different needs (consistency, queryability, auditability), so polyglot persistence is recommended:

| Function | Recommended DB Type | Examples | Reason |

| ------------------------------------------ | ----------------------------- | ------------------------------------- | ---------------------------------------------------- |

| Metadata store (topology, configs, schema) | Strongly consistent KV store | etcd, Consul, Zookeeper, FoundationDB | Needs strong consistency, distributed coordination |

| Time-series & health metrics | Time-series DB | Prometheus, InfluxDB | Optimized for metrics and retention policies |

| Audit logs, event logs | Append-optimized DB or log DB | PostgreSQL, ClickHouse, Kafka | Good for append-only, analytical queries |

| Backup/restore metadata | Relational DB or KV store | PostgreSQL, MySQL, etcd | Requires relational integrity and time-based queries |

| Access control & users | Relational DB | PostgreSQL, MySQL | Complex relationships and querying |

📐 2. Database Schema Design (Relational or KV-backed)

Let’s assume we use PostgreSQL or MySQL for most relational parts and a distributed KV store like etcd for leader/config state.

🔸 A. nodes Table

Metadata of all registered nodes.

CREATE TABLE nodes (

node_id UUID PRIMARY KEY,

host VARCHAR(255),

zone VARCHAR(50),

instance_type VARCHAR(50),

status VARCHAR(20), -- e.g., ACTIVE, UNHEALTHY, DECOMMISSIONED

last_heartbeat TIMESTAMP,

created_at TIMESTAMP,

updated_at TIMESTAMP

);

🔸 B. partitions Table

Tracks ownership of each partition/shard.

CREATE TABLE partitions (

partition_id UUID PRIMARY KEY,

leader_node_id UUID REFERENCES nodes(node_id),

replica_node_ids UUID[], -- list of node_ids

keyspace VARCHAR(255),

table_name VARCHAR(255),

version INT,

status VARCHAR(20), -- LEADER, FOLLOWER, MOVING

updated_at TIMESTAMP

);

🔸 C. schemas Table

Stores table schemas and version history.

CREATE TABLE schemas (

schema_id UUID PRIMARY KEY,

keyspace VARCHAR(255),

table_name VARCHAR(255),

ddl TEXT,

version INT,

applied_at TIMESTAMP,

rollback_version INT,

created_by VARCHAR(100)

);

🔸 D. configurations Table

Stores global or scoped cluster configs.

CREATE TABLE configurations (

config_id UUID PRIMARY KEY,

scope VARCHAR(50), -- e.g., GLOBAL, NODE, ZONE

key VARCHAR(255),

value TEXT,

version INT,

created_at TIMESTAMP,

created_by VARCHAR(100)

);

🔸 E. audit_logs Table

Stores user/system actions.

CREATE TABLE audit_logs (

log_id UUID PRIMARY KEY,

actor VARCHAR(100),

action VARCHAR(255),

resource_type VARCHAR(50),

resource_id VARCHAR(100),

payload JSONB,

created_at TIMESTAMP

);

🔸 F. backups Table

Stores backup schedule and status metadata.

CREATE TABLE backups (

backup_id UUID PRIMARY KEY,

keyspace VARCHAR(255),

scope VARCHAR(50),

frequency VARCHAR(50),

retention_days INT,

status VARCHAR(50), -- e.g., SCHEDULED, IN_PROGRESS, FAILED

created_at TIMESTAMP,

last_run TIMESTAMP,

next_run TIMESTAMP

);

🔸 G. users, roles, and permissions Tables

users

CREATE TABLE users (

user_id UUID PRIMARY KEY,

username VARCHAR(100) UNIQUE,

password_hash TEXT,

email VARCHAR(100),

created_at TIMESTAMP

);

roles

CREATE TABLE roles (

role_id UUID PRIMARY KEY,

name VARCHAR(100),

description TEXT

);

user_roles

CREATE TABLE user_roles (

user_id UUID REFERENCES users(user_id),

role_id UUID REFERENCES roles(role_id),

PRIMARY KEY (user_id, role_id)

);

permissions

CREATE TABLE permissions (

permission_id UUID PRIMARY KEY,

role_id UUID REFERENCES roles(role_id),

action VARCHAR(100),

resource_type VARCHAR(100)

);

⚖️ Partitioning & Sharding (Optional for Scale)

For large deployments:

Partition

audit_logsandmetricsby time (monthly).Partition

nodes,partitions,schemasby zone or keyspace for faster lookups.Use connection pooling & query caching for API latency optimization.

ChatGPT said:

Designing the Control Plane of a Distributed Database as a set of microservices brings modularity, scalability, and isolation of concerns. Below is a breakdown of the core microservices and how they interact.

🧩 Microservices in the Control Plane

| Microservice | Responsibility |

| ------------------------ | ----------------------------------------------------------------------- |

| **Cluster Manager** | Node registration, heartbeat, topology updates |

| **Partition Manager** | Shard/partition placement, rebalancing, failover coordination |

| **Schema Service** | Schema versioning, validation, rollout, rollback |

| **Config Service** | Global/zone/node-level config distribution and versioning |

| **Backup Service** | Schedule, orchestrate, and track backups/restore jobs |

| **Security Service** | User auth, role-based access control (RBAC), certificate/key rotation |

| **Audit Service** | Centralized logging of all control actions for traceability |

| **Orchestration Engine** | Triggers and coordinates workflows (rebalancing, failover, upgrades) |

| **API Gateway** | Exposes REST/gRPC endpoints, rate limiting, auth enforcement |

| **Telemetry Service** | Aggregates and exposes metrics, node health, SLA violations |

| **Admin UI Service** | Frontend dashboard consuming control APIs for observability and control |

🔁 Interactions Between Microservices

Here’s a simplified interaction diagram in textual form (can be visualized later):

+-------------+ +------------------+ +-------------------+

| Admin/API | <------> | API Gateway | <-------> | All Services |

+-------------+ +------------------+ +-------------------+

|

v

+----------------+ +--------------------+ +---------------------+

| Cluster Manager| <--> | Partition Manager | <----> | Orchestration Engine|

+----------------+ +--------------------+ +---------------------+

| | |

v v v

+---------------+ +------------------+ +--------------------+

| Config Service| <--> | Schema Service | <---------> | Backup Service |

+---------------+ +------------------+ +--------------------+

+----------------------+ +---------------------+

| Security Service | <--> | Audit Service |

+----------------------+ +---------------------+

|

v

+----------------------+

| Telemetry Service |

+----------------------+

📌 Sample Use Case Flows

🔹 1. Node Registration

Node agent sends request → API Gateway → Cluster Manager

Cluster Manager updates topology (etcd) → Audit log generated

Telemetry service starts tracking node health

🔹 2. Schema Update

Admin sends schema update → API Gateway → Schema Service

Schema Service validates → persists in metadata DB → triggers config push to nodes via Cluster Manager

Schema version stored, audit log created

🔹 3. Partition Rebalancing

Partition Manager detects imbalance via metrics

Triggers Orchestration Engine → consults Config + Cluster Manager

Coordinates shard moves, updates topology

Audit log recorded, health monitored

🔹 4. Backup Trigger

Admin UI/API → Backup Service

Backup metadata stored → Job sent to relevant nodes

On completion → Audit entry logged, status exposed via telemetry

🛡️ Cross-Cutting Concerns (Handled by Sidecars/Middleware)

Logging/Auditing: All requests/events piped to Audit Service

AuthN/AuthZ: Handled at API Gateway and enforced at service level

Tracing/Monitoring: Exposed via Telemetry Service (Prometheus/OpenTelemetry)

Here's a detailed explanation of how each microservice interacts with the others in your control plane architecture diagram:

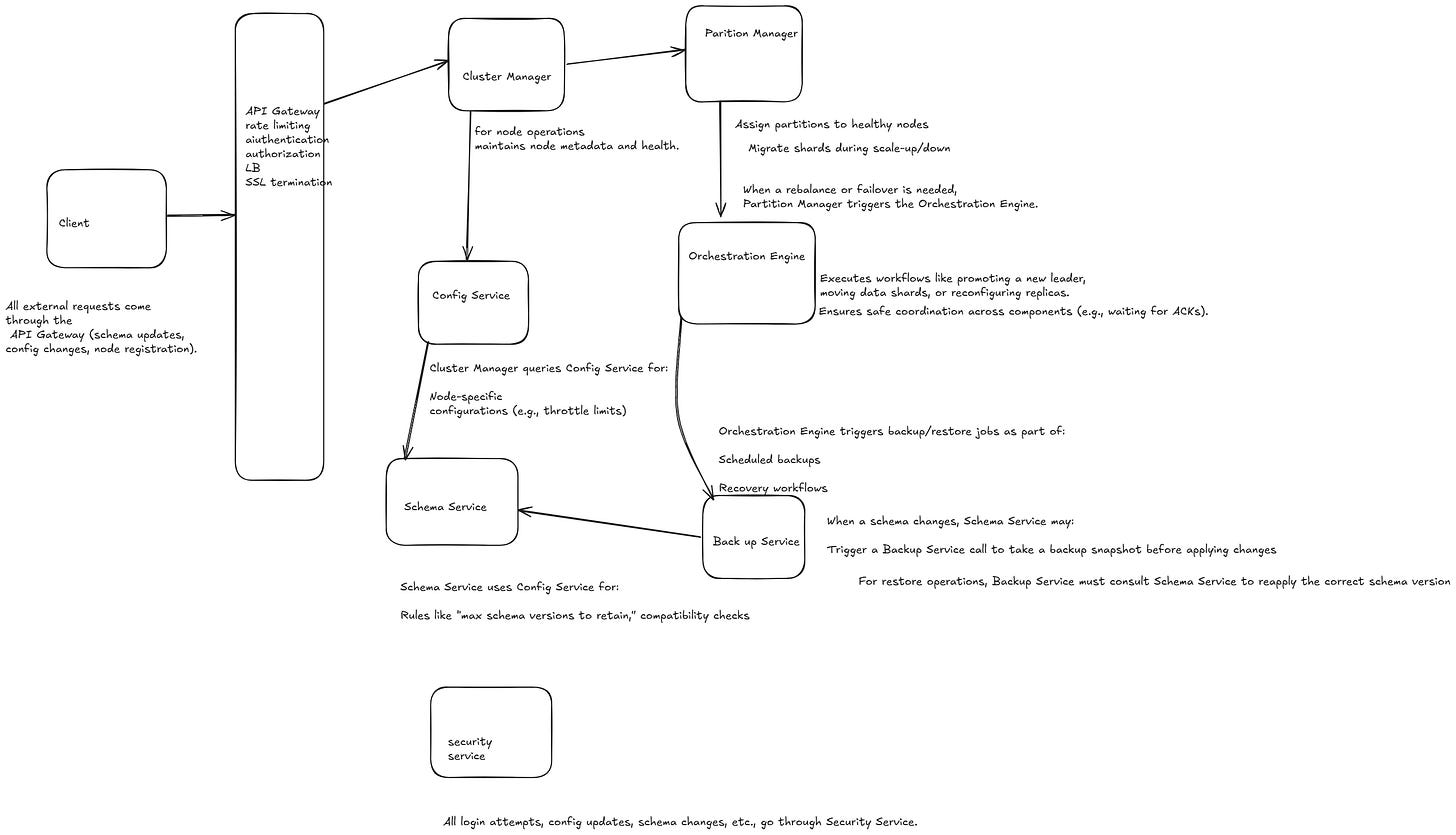

🔹 1. Admin/API ↔ API Gateway

All external requests come through the API Gateway (schema updates, config changes, node registration).

The API Gateway handles:

Authentication (with Security Service)

Rate limiting

Routing to appropriate microservices

🔹 2. API Gateway ↔ All Services

The API Gateway acts as a reverse proxy and forwards requests to:

Cluster Manager for node operations

Schema Service for schema updates

Partition Manager for rebalancing and topology

Orchestration Engine for failover/upgrade workflows

Config Service for global/node-level configuration

Backup Service for backup/restore operations

Security Service for managing users/roles

Telemetry Service for exposing system health

🔹 3. Cluster Manager ↔ Partition Manager

Cluster Manager maintains node metadata and health.

Partition Manager uses this data to:

Assign partitions to healthy nodes

Migrate shards during scale-up/down

Detect imbalances or under-replicated partitions

🔹 4. Partition Manager ↔ Orchestration Engine

When a rebalance or failover is needed, Partition Manager triggers the Orchestration Engine.

The Orchestration Engine:

Executes workflows like promoting a new leader, moving data shards, or reconfiguring replicas.

Ensures safe coordination across components (e.g., waiting for ACKs).

🔹 5. Config Service ↔ Cluster Manager & Schema Service

Cluster Manager queries Config Service for:

Node-specific configurations (e.g., throttle limits)

Schema Service uses Config Service for:

Rules like “max schema versions to retain,” compatibility checks

🔹 6. Schema Service ↔ Backup Service

When a schema changes, Schema Service may:

Trigger a Backup Service call to take a backup snapshot before applying changes

For restore operations, Backup Service must consult Schema Service to reapply the correct schema version

🔹 7. Orchestration Engine ↔ Backup Service

Orchestration Engine triggers backup/restore jobs as part of:

Scheduled backups

Recovery workflows

Pre/post deployment hooks

🔹 8. Security Service ↔ Audit Service

All login attempts, config updates, schema changes, etc., go through Security Service.

Actions are logged to the Audit Service, which persists:

User ID, action, timestamp, payload, and status

🔹 9. All Services ↔ Audit Service

Every control action (node join, schema push, config update) sends structured logs to Audit Service

Ensures traceability and compliance

🔹 10. All Services ↔ Telemetry Service

All services emit metrics to the Telemetry Service, which aggregates:

Node health, API latencies, heartbeat intervals

Schema rollout success rates, backup durations

Used by Admins/UI to monitor the control plane in real time

📌 Flow Example: Schema Update

Admin sends schema DDL → API Gateway → Schema Service

Schema Service validates → stores new version → sends update to Cluster Manager

Cluster Manager pushes update to nodes

Backup Service is optionally invoked for pre-change snapshot

Audit Service logs schema update

Telemetry Service records the rollout metrics

HLD