Different Types of Load Balancers and When to Use Them

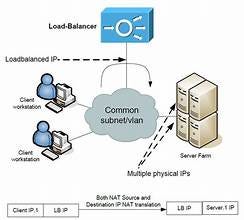

Load balancers distribute incoming network traffic across multiple servers to ensure reliability, scalability, and high availability of applications.

Types of Load Balancers

1. Based on Deployment Layer

a. Layer 4 Load Balancer (Transport Layer)

Operates at the OSI Model’s transport layer (TCP/UDP protocols).

Distributes traffic based on IP addresses and port numbers.

Efficient and lightweight; focuses only on connection-level information.

Example: Routing each TCP connection to a backend based on a hash of the client IP.

Use Case: Applications requiring low latency, such as gaming or real-time systems.

Tools: AWS NLB (Network Load Balancer), HAProxy (Layer 4).
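A Layer 4 balancer picks a destination using only addresses and ports and never reads the payload. The minimal Python sketch below illustrates that decision. It is a variation on the example above: it hashes the full connection 4-tuple (client IP and port, listener IP and port) rather than the client IP alone, which is one common way flow-hashing balancers spread connections. The `Connection` type, the backend addresses, and the use of SHA-256 are illustrative assumptions, not how AWS NLB or HAProxy hash internally.

```python
import hashlib
from typing import NamedTuple


class Connection(NamedTuple):
    """Connection-level fields a Layer 4 balancer sees (hypothetical type)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical backend pool; addresses are placeholders.
BACKENDS = ["10.0.0.11:7777", "10.0.0.12:7777", "10.0.0.13:7777"]


def pick_backend(conn: Connection, backends: list[str]) -> str:
    """Map a connection's 4-tuple to one backend with a stable hash.

    Every packet of a TCP connection carries the same 4-tuple, so the whole
    connection lands on the same backend. The payload is never inspected.
    """
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


conn = Connection("203.0.113.7", 51514, "198.51.100.1", 7777)
print(pick_backend(conn, BACKENDS))  # the same backend every time for this connection
```

Because the client port is part of the key, a new connection from the same client may land on a different backend; the IP Hash strategy below pins the client itself.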

b. Layer 7 Load Balancer (Application Layer)

Operates at the application layer (HTTP/HTTPS).

Distributes traffic based on application-specific data (e.g., URLs, cookies, headers).

Supports advanced features like SSL termination, content-based routing, and caching.

Example: Directing /images traffic to one server and /api traffic to another.

Use Case: Web applications requiring intelligent routing.

Tools: AWS ALB (Application Load Balancer), NGINX, Traefik.
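By contrast, a Layer 7 balancer first parses the request and can route on its contents. This minimal sketch implements the /images versus /api example above as a longest-prefix match on the URL path; the routing table, pool names, and fallback pool are hypothetical.

```python
# Hypothetical routing table: path prefix -> backend pool name.
ROUTES = {
    "/images/": "image-servers",
    "/api/": "api-servers",
    "/api/v2/": "api-v2-servers",
}
DEFAULT_POOL = "web-servers"  # hypothetical pool for everything else


def route(path: str) -> str:
    """Choose a backend pool from the request path (application-layer data).

    The longest matching prefix wins, so "/api/v2/users" goes to the v2
    pool even though it also starts with "/api/".
    """
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    if not matches:
        return DEFAULT_POOL
    return ROUTES[max(matches, key=len)]


print(route("/images/logo.png"))  # image-servers
print(route("/api/v2/users"))     # api-v2-servers
print(route("/index.html"))       # web-servers
```

Longest-prefix selection is similar to how NGINX chooses among plain prefix `location` blocks before it considers regular-expression locations.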

2. Based on Traffic Distribution Algorithm

a. Round Robin

Distributes traffic sequentially to each server in a pool.

Simple and effective for servers with similar capabilities.

Use Case: Evenly distributed workloads without dependency on state.
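Round robin needs no state beyond the current position in the list. A minimal single-threaded Python sketch, with hypothetical server names:

```python
import itertools


class RoundRobin:
    """Hand out servers in a fixed, repeating order (not thread-safe)."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def next_server(self) -> str:
        return next(self._cycle)


lb = RoundRobin(["app1", "app2", "app3"])
print([lb.next_server() for _ in range(5)])  # ['app1', 'app2', 'app3', 'app1', 'app2']
```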

b. Least Connections

Sends traffic to the server with the fewest active connections.

Ideal for scenarios with varying load per request.

Use Case: Systems where some requests require more processing time.
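Least connections requires the balancer to track how many requests each server is currently handling and to decrement that count when a request finishes. A minimal single-threaded sketch with hypothetical server names:

```python
class LeastConnections:
    """Track active connections per server and pick the least busy one."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties are
        # broken by the order in which the servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the connection or request finishes.
        self.active[server] -= 1


lb = LeastConnections(["app1", "app2"])
a = lb.acquire()   # app1 (both idle, tie goes to app1)
b = lb.acquire()   # app2 (app1 already has one active connection)
c = lb.acquire()   # app1 (tie again at one each)
lb.release(a)
lb.release(c)      # app1 has finished both requests; app2 is still busy
print(lb.acquire())  # app1
```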

c. IP Hash

Hashes the client’s IP to determine the server.

Ensures requests from the same client are consistently routed to the same server.

Use Case: Applications requiring client session persistence.
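A minimal sketch of IP hashing, assuming a simple hash-then-modulo mapping (real balancers may use different hash functions); server names and the client address are hypothetical:

```python
import hashlib


def ip_hash(client_ip: str, servers: list[str]) -> str:
    """Deterministically map a client IP address to one server.

    hashlib is used rather than Python's built-in hash(), whose output for
    strings is randomized per process and would reshuffle clients on restart.
    """
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app1", "app2", "app3"]
print(ip_hash("198.51.100.23", servers))  # the same server on every call
```

Two caveats follow from the modulo step: adding or removing a server changes `len(servers)` and remaps most clients (consistent hashing is the usual remedy), and many users behind one NAT address all land on the same server.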

d. Weighted Round Robin

Assigns weights to servers based on their capacity; higher-weighted servers receive more traffic.

Use Case: Heterogeneous server environments with varying capacities.
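A naive weighted round robin with weights 3 and 1 would send three requests in a row to the first server, then one to the second. The sketch below instead uses the "smooth" weighted round-robin scheme (the variant NGINX's upstream module is known for), which interleaves picks while still honoring the weights. The class and server names are illustrative.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin that interleaves picks instead of bursting."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # 1. Every server earns credit equal to its weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # 2. The server with the most credit is chosen (first listed wins ties)...
        chosen = max(self.current, key=self.current.get)
        # 3. ...and pays back the total weight, so it falls behind the others.
        self.current[chosen] -= self.total
        return chosen


lb = SmoothWeightedRoundRobin({"backend1": 3, "backend2": 1})
print([lb.next_server() for _ in range(4)])  # ['backend1', 'backend1', 'backend2', 'backend1']
```

Over every full cycle each server is chosen exactly as many times as its weight, but a heavy server never monopolizes a long run of consecutive requests.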

e. Random

Randomly selects a server for each request.

Use Case: Quick and simple balancing for low-traffic environments.
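Random selection is a one-liner; the sketch below also counts picks to show that the split only evens out over many requests. The seeded generator and server names are assumptions made so the demo is reproducible.

```python
import random
from collections import Counter
from typing import Optional


def pick_random(servers: list[str], rng: Optional[random.Random] = None) -> str:
    """Choose one server uniformly at random, independently for each request."""
    chooser = rng if rng is not None else random
    return chooser.choice(servers)


servers = ["app1", "app2", "app3"]
rng = random.Random(42)  # seeded only to make this demo reproducible
counts = Counter(pick_random(servers, rng) for _ in range(30_000))
print(counts)  # each server ends up with roughly 10,000 requests
```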

3. Based on Deployment Model

a. Hardware Load Balancers

Dedicated appliances for load balancing.

Provide high performance and reliability at the cost of flexibility and scalability.

Examples: F5 BIG-IP, Citrix ADC.

Use Case: Enterprise data centers with high throughput requirements.

b. Software Load Balancers

Run on standard hardware and provide flexible, scalable solutions.

Examples: HAProxy, NGINX, Envoy.

Use Case: Modern, containerized, or cloud-native applications.

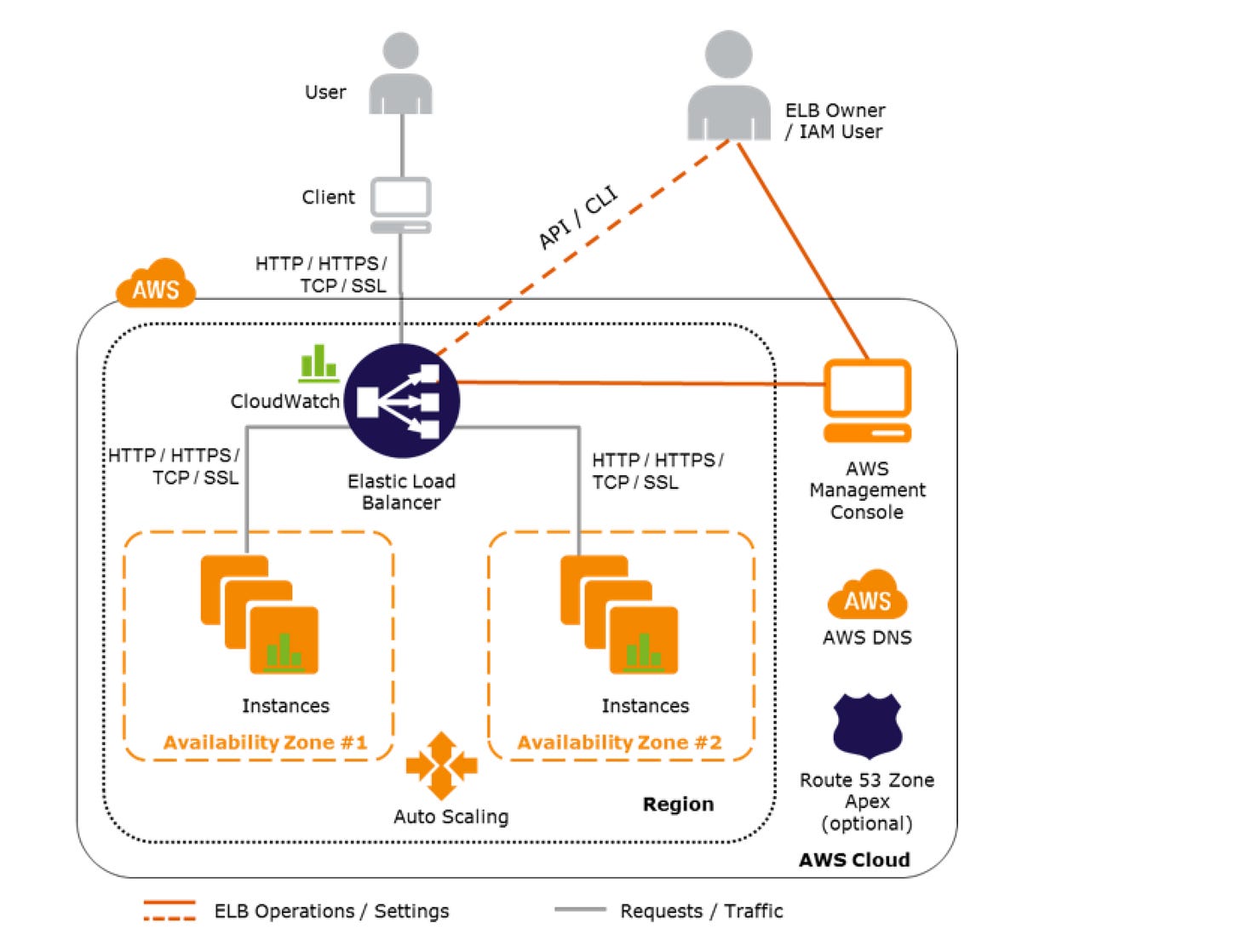

c. Cloud Load Balancers

Fully managed load balancing services provided by cloud platforms.

Examples: AWS ELB, Google Cloud Load Balancing, Azure Load Balancer.

Use Case: Applications deployed on cloud infrastructure.

4. Based on Functionality

a. Global Load Balancers

Direct traffic across multiple data centers or regions.

Use DNS-based load balancing or geo-routing to send each user to a nearby, healthy region.

Examples: Cloudflare Load Balancer, AWS Route 53.

Use Case: Multi-region deployments with a global audience.
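Global balancers typically make this choice at DNS resolution time, using geo-IP data to estimate where the user is. The sketch below assumes the user's coordinates are already known and picks the closest region that is passing health checks, by great-circle (haversine) distance. The region names and coordinates are made up for illustration.

```python
import math

# Hypothetical regions: name -> (latitude, longitude) in degrees.
REGIONS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-southeast": (1.35, 103.8),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_healthy_region(user: tuple[float, float], healthy: set[str]) -> str:
    """Pick the closest region that is passing health checks.

    Raises ValueError if no region is healthy.
    """
    candidates = {name: loc for name, loc in REGIONS.items() if name in healthy}
    return min(candidates, key=lambda name: haversine_km(user, candidates[name]))


paris = (48.86, 2.35)
print(nearest_healthy_region(paris, {"us-east", "eu-west", "ap-southeast"}))  # eu-west
print(nearest_healthy_region(paris, {"us-east", "ap-southeast"}))             # us-east
```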

b. Internal (Private) Load Balancers

Operate within private networks, distributing traffic among internal services.

Examples: Internal AWS NLB, Azure Internal Load Balancer.

Use Case: Microservices or internal API traffic routing.

c. External (Public) Load Balancers

Distribute incoming public traffic to backend servers.

Use Case: Public-facing applications, such as websites or APIs.

5. Based on Additional Capabilities

a. Reverse Proxy Load Balancers

Act as an intermediary between clients and servers, providing features like SSL termination and caching.

Examples: NGINX, Traefik.

Use Case: Web applications needing encryption and optimized performance.

b. Content Delivery Load Balancers

Distribute traffic based on content type or location.

Examples: Akamai, AWS CloudFront.

Use Case: High-performance static content delivery.

c. Stateful Load Balancers

Maintain session persistence by directing all requests from a client to the same backend.

Use Case: Applications requiring consistent client-server sessions, such as shopping carts.
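One common way to implement this persistence at Layer 7 is a cookie: the first response sets a session cookie, and later requests that carry it go to the same backend. The sketch below keeps the session table in memory and assigns new sessions with round robin; the cookie name, class, and server names are hypothetical. Unlike IP hash, this keeps a client on the same server even if its IP address changes, but an in-memory table is lost if the balancer restarts.

```python
import itertools
import uuid
from typing import Optional

COOKIE_NAME = "lb_session"  # hypothetical cookie name


class StickyBalancer:
    """Cookie-based session persistence layered on round robin (sketch)."""

    def __init__(self, servers: list[str]) -> None:
        self._next = itertools.cycle(servers)
        self.sessions: dict[str, str] = {}  # session id -> assigned server

    def route(self, cookies: dict[str, str]) -> tuple[str, Optional[str]]:
        """Return (server, cookie_to_set); cookie_to_set is None for known sessions."""
        session_id = cookies.get(COOKIE_NAME)
        if session_id in self.sessions:
            return self.sessions[session_id], None  # returning client: same backend
        # New client: pick a server normally, then remember the choice.
        server = next(self._next)
        session_id = uuid.uuid4().hex
        self.sessions[session_id] = server
        return server, session_id


lb = StickyBalancer(["app1", "app2"])
server, cookie = lb.route({})               # first visit: app1, cookie issued
again, _ = lb.route({COOKIE_NAME: cookie})  # cookie sent back: app1 again
other, _ = lb.route({})                     # a different new client: app2
```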

Key Considerations for Choosing a Load Balancer

Scalability Needs: Can it handle increased traffic dynamically?

Latency Sensitivity: Does the application require ultra-low latency?

Application Type: Is it HTTP-based, TCP-based, or both?

Budget Constraints: Consider the cost of hardware versus software versus managed services.

Geo-Distribution: Are users or servers globally dispersed?

Here are example configurations for four common types of load balancers:

1. Layer 4 Load Balancer (TCP/UDP Load Balancing)

Example: Using HAProxy for TCP load balancing.

# Global settings
global
    log stdout format raw local0
    maxconn 4096

# Default settings
defaults
    log global
    mode tcp                # Layer 4 (Transport Layer)
    timeout connect 5s
    timeout client 30s
    timeout server 30s

# Frontend configuration
frontend tcp_frontend
    bind *:80
    default_backend tcp_backend

# Backend configuration
backend tcp_backend
    balance leastconn
    server app1 192.168.1.10:80 check
    server app2 192.168.1.11:80 check

Features:

Traffic is balanced based on the least number of active connections.

Two backend servers (192.168.1.10 and 192.168.1.11) handle TCP traffic on port 80.
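The check keyword on each server line enables health checks; by default HAProxy's check is a plain TCP connection attempt. A minimal Python sketch of that idea (the function names are hypothetical, and HAProxy adds check intervals and rise/fall thresholds on top):

```python
import socket


def tcp_health_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only proves the port accepts connections, not that the
    application behind it is answering correctly.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def healthy_servers(servers: dict[str, tuple[str, int]]) -> list[str]:
    """Filter a pool down to the servers that pass the check."""
    return [name for name, (host, port) in servers.items()
            if tcp_health_check(host, port)]


# Example pool mirroring the HAProxy backend above.
pool = {"app1": ("192.168.1.10", 80), "app2": ("192.168.1.11", 80)}
```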

2. Layer 7 Load Balancer (HTTP/HTTPS Load Balancing)

Example: Using NGINX for HTTP load balancing with content-based routing.

# nginx will not start without a top-level events block
events {}

# Load balancing settings
http {
    upstream backend_pool {
        server backend1.example.com weight=3;  # Weighted round-robin: about 3 of every 4 requests
        server backend2.example.com;           # Default weight=1
    }

    server {
        listen 80;

        # Content-based routing
        location /api/ {
            proxy_pass http://backend_pool;
        }

        location /static/ {
            root /var/www;  # Serve /static/x directly from /var/www/static/x
        }
    }
}

Features:

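Weighted round-robin (weight=3 versus the default weight of 1) sends roughly three of every four proxied requests to backend1 and one to backend2.

/api/ requests are routed to the backend pool, while /static/ requests are served directly from disk by NGINX.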

3. Cloud Load Balancer (AWS ALB - Application Load Balancer)

Example: Using the AWS CLI to create an ALB that forwards HTTP traffic to a target group.

Step 1: Create Target Groups

aws elbv2 create-target-group \
    --name api-target-group \
    --protocol HTTP \
    --port 80 \
    --vpc-id vpc-12345678

Step 2: Create an Application Load Balancer

aws elbv2 create-load-balancer \
    --name my-alb \
    --subnets subnet-12345678 subnet-87654321 \
    --security-groups sg-12345678

Step 3: Add an HTTP Listener

aws elbv2 create-listener \
    --load-balancer-arn arn:aws:elasticloadbalancing:region:account-id:loadbalancer/app/my-alb/12345678 \
    --protocol HTTP \
    --port 80 \
    --default-actions Type=forward,TargetGroupArn=arn:aws:elasticloadbalancing:region:account-id:targetgroup/api-target-group/12345678

Features:

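Creates a target group (api-target-group) and an ALB attached to two subnets.

The listener's default action forwards all HTTP traffic on port 80 to api-target-group. Sending only /api requests to this group requires an additional listener rule (aws elbv2 create-rule with a path-pattern condition such as /api/*), and targets must be registered with aws elbv2 register-targets before the ALB has anywhere to send traffic.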

4. Content Delivery Load Balancer (CDN with Load Balancing)

Example: Using Cloudflare for load balancing.

Create a Load Balancer Pool:

Add backend servers (e.g., 192.168.1.10 and 192.168.1.11) to a pool.

Assign health checks to ensure availability.

Set Up Load Balancer Rules:

Route requests to the nearest available backend based on the user’s location.

Cloudflare Dashboard Configuration:

Go to the Load Balancers section.

Add a load balancer and configure:

Origin Pools: Define backend servers.

Proximity Steering: Reduce latency by sending each user to the geographically closest pool.

Features:

Built-in DDoS protection.

Global routing for improved latency.

Source: Wikipedia