How does DynamoDB use Multi-Paxos for replication?

Let's dissect

DynamoDB, inspired by Amazon’s Dynamo, uses a highly available replication model in which reads are eventually consistent by default. The original Dynamo does not use Paxos for replication. DynamoDB, according to Amazon’s published description of the system, uses Multi-Paxos (a quorum-based consensus protocol) for leader election and consensus among the replicas of a partition, which underpins its replicated write-ahead logging and metadata consistency.

How Multi-Paxos Works in DynamoDB's Replication

DynamoDB is a fully managed NoSQL database that replicates data across multiple availability zones (AZs) within a region and serves eventually consistent reads by default. Writes are acknowledged once a quorum of replicas has persisted them, and Multi-Paxos plays a role in the following aspects:

Leader Election for Replicated Logs:

DynamoDB stores data across multiple nodes within a region.

Each storage node maintains a write-ahead log (WAL) to ensure durability.

A Paxos-based consensus mechanism is used to elect a leader among replicas.

The leader is responsible for managing the log and coordinating writes.

Write Propagation & Replication:

A write request first goes to the leader (determined via Paxos).

The leader appends the write to its log and then replicates it to followers.

Followers acknowledge once they persist the write.

The leader applies the changes and commits the write once a quorum of nodes acknowledges it.
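The write path above can be sketched as a small in-memory simulation. This is only an illustration under stated assumptions: the `Replica` class and `replicate_write` function are hypothetical names, there is no networking, and a real system would send records to followers in parallel.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One storage node holding a write-ahead log (hypothetical model)."""
    name: str
    available: bool = True
    log: list = field(default_factory=list)

    def persist(self, record) -> bool:
        # A node acknowledges only after the record is in its log.
        if not self.available:
            return False
        self.log.append(record)
        return True


def replicate_write(leader: Replica, followers: list, record) -> bool:
    """Return True if a quorum of the replication group persisted the record."""
    group_size = 1 + len(followers)
    quorum = group_size // 2 + 1
    if not leader.persist(record):
        return False  # an unavailable leader cannot accept the write
    acks = 1  # the leader's own log counts toward the quorum
    for follower in followers:
        if follower.persist(record):
            acks += 1
    return acks >= quorum


leader = Replica("a")
followers = [Replica("b"), Replica("c", available=False)]
committed = replicate_write(leader, followers, {"key": "k1", "value": 1})
```

With three replicas the quorum is two, so the write commits even though replica "c" is down. If both followers were unavailable, the same call would return False.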

Ensuring Read Consistency:

By default, DynamoDB allows eventual consistency, meaning reads may return stale data.

If a strongly consistent read is required, the leader replica serves it, because the leader has seen every committed write. Eventually consistent reads can be served by any replica.
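From the client side, the choice between the two read modes is a single flag on the request. The sketch below builds the arguments for a GetItem call; `ConsistentRead` is the real request parameter, while the helper name `build_get_item_request`, the table name, and the key are assumptions made for illustration.

```python
def build_get_item_request(table_name: str, key: dict, strong: bool = False) -> dict:
    """Build keyword arguments for a DynamoDB GetItem call."""
    return {
        "TableName": table_name,
        "Key": key,
        # False (the default) allows an eventually consistent read.
        "ConsistentRead": strong,
    }


request = build_get_item_request("Orders", {"order_id": {"S": "123"}}, strong=True)

# With boto3 installed and credentials configured, this could be sent as:
#   import boto3
#   boto3.client("dynamodb").get_item(**request)
```

Keeping the request construction separate from the network call makes the flag easy to unit test.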

Fault Tolerance & Rebalancing:

If the leader node fails, Multi-Paxos ensures that a new leader is elected.

The remaining nodes continue to process writes without losing data.

This allows DynamoDB to maintain high availability.
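To make the failover idea concrete, here is a deliberately simplified sketch. It is not Multi-Paxos: it only shows why a majority matters, by refusing to pick a leader unless more than half of the replicas are reachable, and then preferring the reachable replica with the longest log. The function name `elect_leader` and the input shapes are assumptions.

```python
def elect_leader(log_lengths: dict, reachable: set):
    """Pick a new leader among reachable replicas, or None without a majority.

    log_lengths maps replica name -> number of persisted log records.
    """
    majority = len(log_lengths) // 2 + 1
    candidates = [name for name in log_lengths if name in reachable]
    if len(candidates) < majority:
        return None  # no quorum, so no leader can be chosen safely
    # Prefer the longest log; break ties by name so the result is deterministic.
    return min(candidates, key=lambda name: (-log_lengths[name], name))


logs = {"a": 5, "b": 5, "c": 4}
new_leader = elect_leader(logs, reachable={"b", "c"})  # replica "a" has failed
```

Because a committed write is on a majority of logs, any reachable majority contains at least one replica that holds it, which is the intuition behind no data being lost on failover.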

Comparison with Dynamo's Original Quorum-Based Model

Classic Dynamo: Uses vector clocks for conflict detection and resolution, plus hinted handoff and quorum reads/writes for availability.

DynamoDB: Uses Paxos-like consensus for leader election in storage nodes while maintaining the quorum-based model for high availability.

Key Takeaways

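Multi-Paxos is mainly used for leader election and log replication. Because a stable leader is already in place, an individual DynamoDB write needs only a quorum acknowledgement, not a fresh election round.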

DynamoDB optimizes availability by relying on quorum-based replication rather than strict consensus for every transaction.

Leader nodes coordinate writes for durability and consistency, while replicas outside the acknowledging quorum catch up asynchronously.

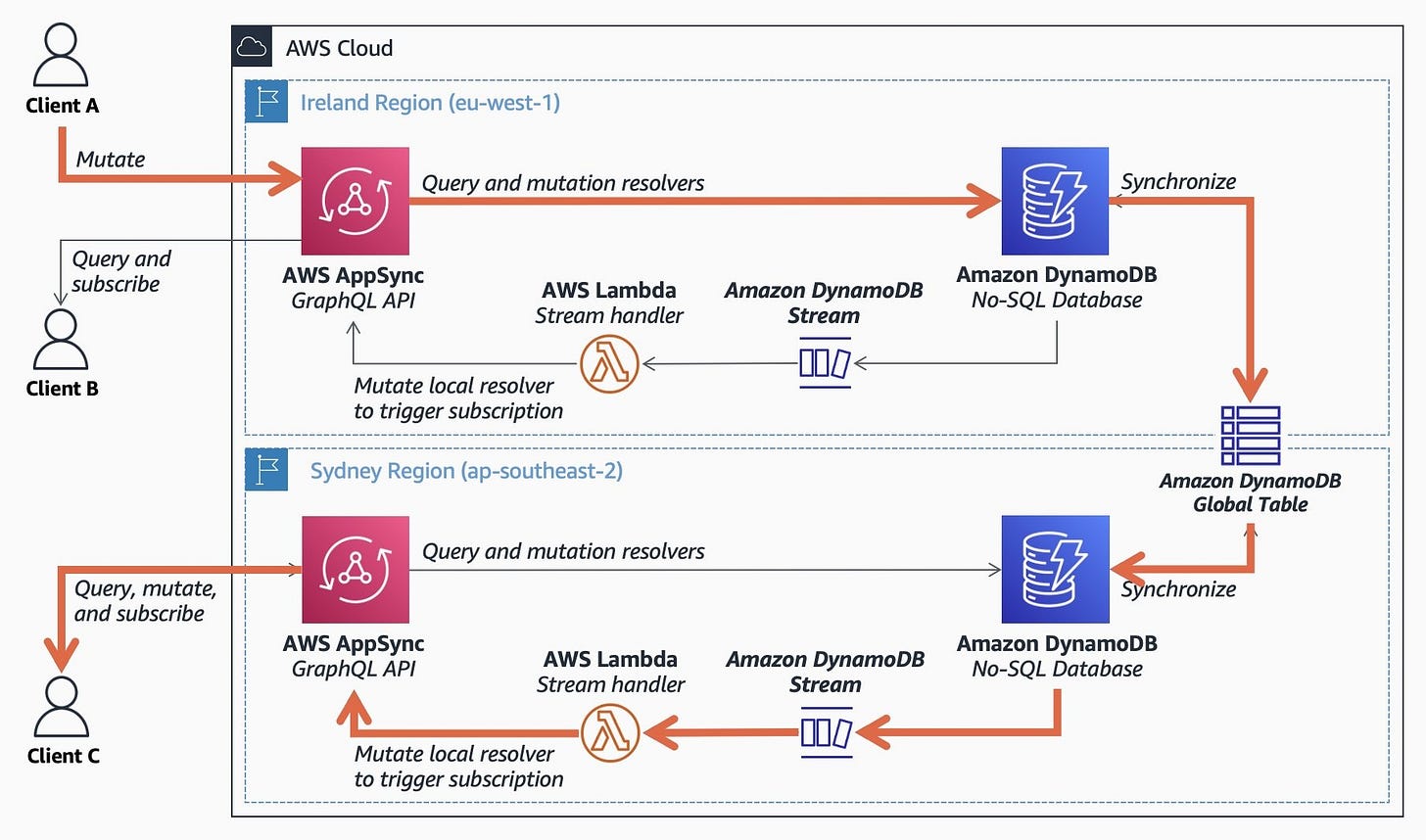

Cross-Region Replication in DynamoDB

DynamoDB supports global tables, which enable multi-region, multi-active replication. This means that writes can occur in multiple AWS regions simultaneously, and changes are replicated asynchronously across all regions.

How Does Cross-Region Replication Work?

DynamoDB achieves cross-region replication through asynchronous propagation of updates using an event-driven model rather than traditional consensus protocols like Paxos. However, consensus mechanisms like Multi-Paxos are still used within a region for leader election and log replication.

Multi-Region Writes (Global Tables)

Each regional replica has its own local leaders (determined by Multi-Paxos) that manage writes within that region.

Writes in one region are asynchronously propagated to other regions using DynamoDB Streams.

Updates are replicated in an eventual consistency model, meaning that conflicts can arise if the same item is modified in multiple regions simultaneously.

Conflict Resolution

DynamoDB uses last writer wins (LWW) by default.

If the same item is modified in multiple regions at the same time, the version with the latest timestamp is kept.

Applications needing more sophisticated conflict resolution can implement custom logic using DynamoDB Streams and AWS Lambda.
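A minimal sketch of last writer wins, assuming each version of an item carries a timestamp and the region that wrote it. The field names `updated_at` and `region`, and the tie-break on region name, are assumptions for illustration, not DynamoDB's internal representation.

```python
def last_writer_wins(local: dict, incoming: dict) -> dict:
    """Return the version with the later timestamp; ties go to the larger region name."""
    local_rank = (local["updated_at"], local["region"])
    incoming_rank = (incoming["updated_at"], incoming["region"])
    return incoming if incoming_rank > local_rank else local


us_version = {"balance": 500, "updated_at": 1700000000.120, "region": "us-east-1"}
eu_version = {"balance": 450, "updated_at": 1700000000.250, "region": "eu-west-1"}

winner = last_writer_wins(us_version, eu_version)
```

Here the eu-west-1 version wins and the us-east-1 update is silently discarded, which is exactly the data-loss risk that motivates custom resolution logic.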

Replication Mechanism

When a write occurs in one region, it triggers a DynamoDB Stream event.

This event is processed asynchronously and forwarded to other regions.

The receiving region applies the update in its local storage.
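The three steps above can be sketched with an in-memory model. The `Region` class and `drain` function are hypothetical, the `outbox` queue merely stands in for a stream of change events, and remote updates are applied only if they carry a newer timestamp.

```python
from collections import deque


class Region:
    """A regional replica with local storage and a queue of pending change events."""

    def __init__(self, name: str):
        self.name = name
        self.items = {}        # key -> (timestamp, value)
        self.outbox = deque()  # change events not yet shipped to other regions

    def write(self, key, value, timestamp):
        # The local write completes without waiting for other regions.
        self.items[key] = (timestamp, value)
        self.outbox.append((key, value, timestamp))

    def apply_remote(self, key, value, timestamp):
        # Replicated updates are not re-queued, which avoids replication loops.
        current = self.items.get(key)
        if current is None or timestamp > current[0]:
            self.items[key] = (timestamp, value)


def drain(source: Region, targets: list) -> None:
    """Forward every pending change event from source to each target region."""
    while source.outbox:
        event = source.outbox.popleft()
        for target in targets:
            target.apply_remote(*event)


us = Region("us-east-1")
eu = Region("eu-west-1")
us.write("user_123", {"balance": 500}, timestamp=1)
visible_before = "user_123" in eu.items  # replication has not run yet
drain(us, [eu])
visible_after = "user_123" in eu.items
```

The gap between `visible_before` and `visible_after` is the replication lag that readers in the other region can observe.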

Failure Handling & Leader Election

Each region functions independently for local availability, ensuring that even if cross-region replication is delayed, applications can continue writing.

If a node fails, Multi-Paxos is used within a region to elect a new leader and continue replication.

Read and Write Performance Considerations

Strong consistency is local to a region but cannot be guaranteed across regions due to the async replication model.

Applications that require low-latency reads should read from the nearest region.

Applications that require strict consistency across regions need to implement custom reconciliation logic.

Key Takeaways

Within a region: DynamoDB uses Multi-Paxos for leader election and log replication.

Across regions: DynamoDB does not use Multi-Paxos but instead relies on asynchronous log shipping via DynamoDB Streams.

Replication is eventual, meaning updates may temporarily diverge but eventually converge.

Conflict resolution follows last writer wins (LWW) unless custom logic is applied.

Optimizations & Trade-offs for High-Write Scenarios in Global Tables

DynamoDB’s Global Tables allow multi-region writes, but high-write scenarios introduce challenges like replication lag, write conflicts, and consistency trade-offs. Here’s how to optimize for performance while balancing these trade-offs.

1️⃣ Optimizing High-Write Throughput

1.1 Use Write Sharding to Distribute Load

Problem: A single partition key can become a hotspot (e.g., all writes go to a single region or partition).

Solution: Use write sharding by adding a suffix or prefix to partition keys.

Example: Instead of user_123, use user_123_0, user_123_1 (with a random shard ID).

Ensures writes are distributed across multiple partitions.
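A short sketch of write sharding, assuming a fixed number of shards per logical key. `SHARD_COUNT`, `sharded_key`, and `all_shard_keys` are hypothetical names, and the right shard count depends on the workload.

```python
import random

SHARD_COUNT = 4  # assumed value; tune to the write volume of the hottest key


def sharded_key(base_key: str, shard_count: int = SHARD_COUNT) -> str:
    """Return the partition key to use for a write, with a random shard suffix."""
    return f"{base_key}_{random.randrange(shard_count)}"


def all_shard_keys(base_key: str, shard_count: int = SHARD_COUNT) -> list:
    """Return every partition key a reader must query to see the full data set."""
    return [f"{base_key}_{shard}" for shard in range(shard_count)]


write_key = sharded_key("user_123")
read_keys = all_shard_keys("user_123")
```

The trade-off is on the read side: writes spread across partitions, but a reader has to query every key in `read_keys` and merge the results.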

1.2 Optimize Write Capacity Mode

Option 1: On-Demand Mode

Best for unpredictable spikes in traffic.

AWS automatically scales based on load.

Option 2: Provisioned Mode with Auto-Scaling

Set read/write capacity units (RCUs/WCUs) with auto-scaling.

Avoids sudden throttling under high load.
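The two options map onto the `BillingMode` setting of a table. The sketch below only builds the relevant arguments for a CreateTable call; `BillingMode` and `ProvisionedThroughput` are real request parameters, while the helper name `billing_settings` and the capacity numbers are assumptions.

```python
from typing import Optional


def billing_settings(on_demand: bool,
                     read_units: Optional[int] = None,
                     write_units: Optional[int] = None) -> dict:
    """Return the billing-related keyword arguments for a CreateTable request."""
    if on_demand:
        return {"BillingMode": "PAY_PER_REQUEST"}
    if read_units is None or write_units is None:
        raise ValueError("provisioned mode needs read and write capacity units")
    return {
        "BillingMode": "PROVISIONED",
        "ProvisionedThroughput": {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
    }


on_demand_args = billing_settings(on_demand=True)
provisioned_args = billing_settings(on_demand=False, read_units=100, write_units=400)
```

Auto-scaling for provisioned tables is configured separately from table creation, so it is not shown here.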

1.3 Enable DynamoDB Accelerator (DAX) for Read-Intensive Workloads

DAX caches frequently accessed items, reducing read pressure on partitions.

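Useful for offloading read traffic from partitions. DAX serves eventually consistent reads from its cache, so it does not make replicated data any fresher.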

2️⃣ Handling Write Conflicts & Ensuring Consistency

2.1 Use Vector Clocks for Custom Conflict Resolution

Problem: Last Writer Wins (LWW) can cause data loss if writes happen in multiple regions simultaneously.

Solution: Maintain versioning metadata using vector clocks.

Example: Each update carries a version [Region: Counter], and conflicts can be resolved based on business logic.
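A minimal sketch of the comparison, assuming a vector clock is stored as a mapping from region name to counter. The function names `compare` and `merge` are hypothetical, and DynamoDB does not maintain these clocks itself; the application would store them as an item attribute.

```python
def compare(a: dict, b: dict) -> str:
    """Compare two vector clocks: 'before', 'after', 'equal', or 'concurrent'."""
    regions = set(a) | set(b)
    a_ahead = any(a.get(r, 0) > b.get(r, 0) for r in regions)
    b_ahead = any(b.get(r, 0) > a.get(r, 0) for r in regions)
    if a_ahead and b_ahead:
        return "concurrent"  # neither update saw the other: a true conflict
    if a_ahead:
        return "after"
    if b_ahead:
        return "before"
    return "equal"


def merge(a: dict, b: dict) -> dict:
    """Combine two clocks by taking the per-region maximum."""
    return {r: max(a.get(r, 0), b.get(r, 0)) for r in set(a) | set(b)}


ordered = compare({"us-east-1": 2, "eu-west-1": 1}, {"us-east-1": 1, "eu-west-1": 1})
conflict = compare({"us-east-1": 2}, {"eu-west-1": 1})
merged = merge({"us-east-1": 2}, {"eu-west-1": 1})
```

Only the "concurrent" case needs business logic. In the ordered cases the later version can safely replace the earlier one.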

2.2 Use Region-Specific UUIDs for Conflict Avoidance

Instead of overwriting values, append new versions with a UUID that includes region metadata.

{ "user_id": "123", "balance": 500, "txn_id": "us-east-1-4567" }
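One possible way to generate such identifiers is to prefix a random UUID with the writing region. The sample record above uses a short numeric suffix; the UUID suffix and the helper names `new_txn_id` and `build_txn_item` are assumptions made for this sketch.

```python
import uuid


def new_txn_id(region: str) -> str:
    """Return an identifier that is unique and records which region wrote it."""
    return f"{region}-{uuid.uuid4()}"


def build_txn_item(user_id: str, balance: int, region: str) -> dict:
    """Build a new version record instead of overwriting the existing one."""
    return {"user_id": user_id, "balance": balance, "txn_id": new_txn_id(region)}


item = build_txn_item("123", 500, "us-east-1")
```

If `txn_id` is part of the item's key, two regions writing at the same moment produce two distinct items instead of one overwriting the other.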

2.3 Use Conditional Writes for Conflict Prevention

Prevent overwrites by ensuring updates only happen if a condition is met.

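    import boto3

    dynamodb = boto3.client('dynamodb')

    # Move the order to 'shipped' only if it is still 'pending'.
    # 'status' is a DynamoDB reserved word, so it is aliased as #s.
    dynamodb.update_item(
        TableName='Orders',
        Key={'order_id': {'S': '123'}},
        UpdateExpression='SET #s = :new_status',
        ConditionExpression='#s = :expected_status',
        ExpressionAttributeNames={'#s': 'status'},
        ExpressionAttributeValues={
            ':new_status': {'S': 'shipped'},
            ':expected_status': {'S': 'pending'},
        },
    )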

3️⃣ Reducing Replication Lag for Faster Multi-Region Updates

3.1 Prioritize Low-Latency Regions for Writes

DynamoDB replicates changes asynchronously, leading to lag.

Write-heavy workloads should:

Route writes preferentially to the closest region.

Use Route 53 latency-based routing.

3.2 Implement Change Data Capture (CDC) for Real-Time Sync

Use DynamoDB Streams + AWS Lambda to process changes immediately.

Example: Stream changes into an event queue (e.g., SQS or Kafka) for faster multi-region syncing.
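A sketch of a Lambda handler that reads a DynamoDB Streams batch. The `Records`, `eventName`, `dynamodb`, `Keys`, and `NewImage` fields follow the stream event format, but `NewImage` is only present for some stream view types and is absent for deletes. The handler name and the returned structure are assumptions, and forwarding to a queue is left out.

```python
def handler(event, context=None):
    """Extract the change type, key, and new image from each stream record."""
    changes = []
    for record in event.get("Records", []):
        data = record["dynamodb"]
        changes.append({
            "action": record["eventName"],      # INSERT, MODIFY, or REMOVE
            "keys": data["Keys"],
            "new_image": data.get("NewImage"),  # None when the image is not included
        })
    return changes


sample_event = {
    "Records": [
        {
            "eventName": "MODIFY",
            "dynamodb": {
                "Keys": {"order_id": {"S": "123"}},
                "NewImage": {"order_id": {"S": "123"}, "status": {"S": "shipped"}},
            },
        },
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"order_id": {"S": "456"}}}},
    ]
}

changes = handler(sample_event)
```

In a real pipeline the loop body would publish each change to the downstream queue rather than collect it in a list.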

4️⃣ Cost Optimization Strategies

4.1 Minimize Unnecessary Cross-Region Writes

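Reduce replication costs by avoiding redundant writes and keeping replicated items small, since every write to a global table is replicated to each replica region.

Example: Instead of rewriting full records on every update, write only when a field has actually changed.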

4.2 TTL (Time-to-Live) for Reducing Storage Costs

Expire old records to prevent unnecessary storage usage.
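DynamoDB's TTL feature expects a Number attribute holding the expiry time in Unix epoch seconds. A minimal sketch of computing that value follows; the attribute name `expires_at` and the 30-day retention period are assumptions.

```python
import time
from typing import Optional

SECONDS_PER_DAY = 86_400


def expiry_epoch(ttl_days: int, now: Optional[float] = None) -> int:
    """Return the Unix epoch second at which an item should expire."""
    start = time.time() if now is None else now
    return int(start) + ttl_days * SECONDS_PER_DAY


# Item in the low-level attribute-value format, expiring 30 days after `now`.
item = {
    "order_id": {"S": "123"},
    "expires_at": {"N": str(expiry_epoch(30, now=1_700_000_000))},
}
```

The `now` parameter exists only to make the function deterministic in tests. Production code would omit it.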

4.3 Use Compression for Large Payloads

Store compressed JSON or use binary serialization (e.g., Avro, Protobuf).
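A sketch of the compressed-JSON option using only the standard library. The helper names are hypothetical, and the resulting bytes would be stored in a Binary attribute.

```python
import json
import zlib


def compress_payload(payload: dict) -> bytes:
    """Serialize to compact JSON and compress it with zlib."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw)


def decompress_payload(blob: bytes) -> dict:
    """Reverse compress_payload."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


payload = {"events": ["page_view"] * 200}
blob = compress_payload(payload)
restored = decompress_payload(blob)
```

Compression pays off for large, repetitive payloads like this one. For very small items the zlib overhead can outweigh the savings, and compressed attributes cannot be used in filter or condition expressions.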

source:wikipedia