What is CDC and its use cases

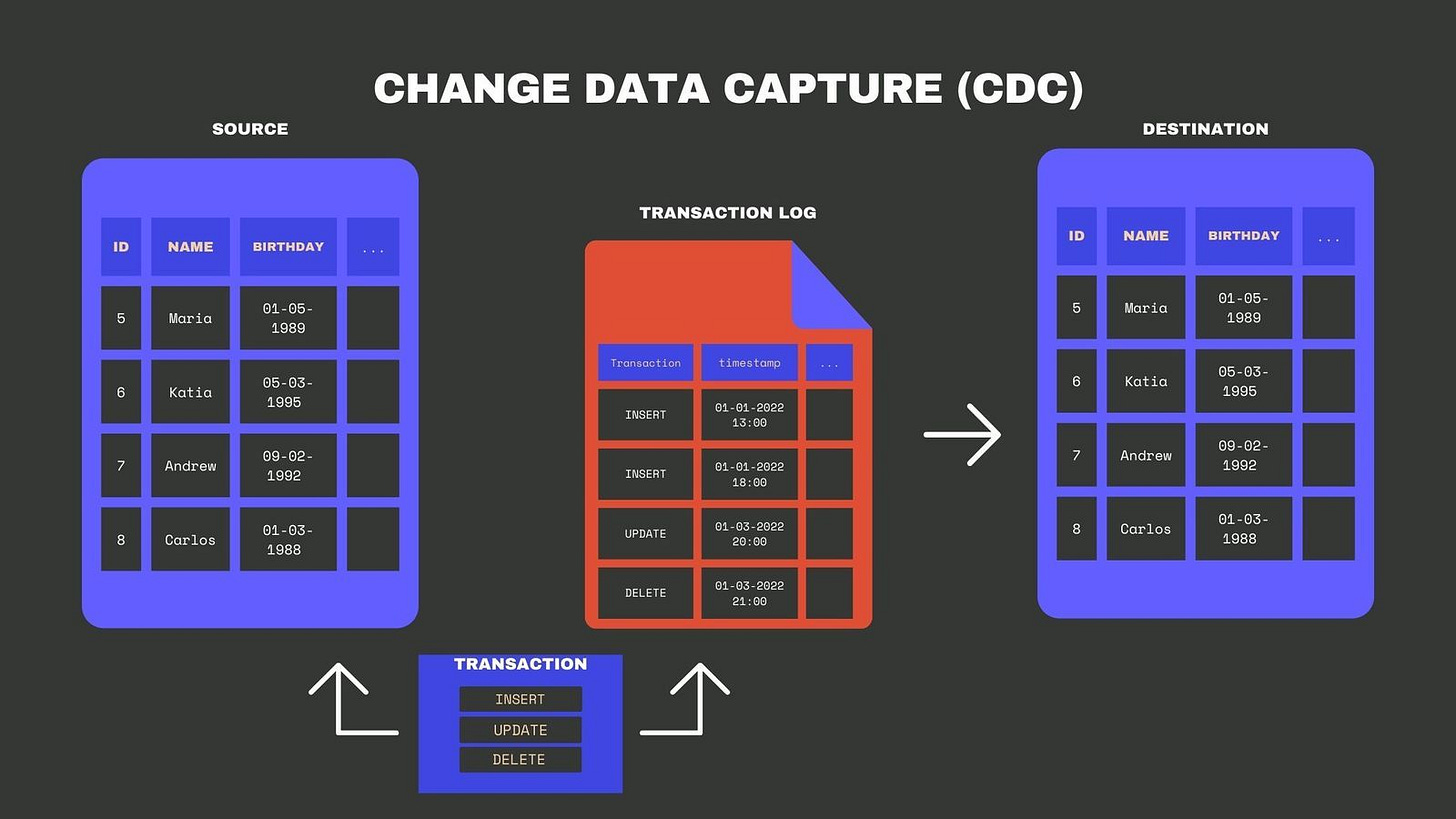

Change Data Capture (CDC) is a mechanism to identify, capture, and track changes (inserts, updates, and deletes) in a data source (typically a database) in near real-time.

CDC can be implemented using various tools and frameworks like Debezium, Kafka Connect, AWS DMS, and built-in database features like MySQL binary logs or PostgreSQL WAL (Write-Ahead Logging).

How CDC Works

Capture Changes: CDC captures changes to the source database (e.g., when new rows are inserted, updated, or deleted).

Transform (Optional): Changes may be transformed (e.g., reformatting, filtering) before they are sent downstream.

Deliver Changes: The changes are delivered to a target system, which could be another database, a data lake, a messaging system (e.g., Kafka), or an analytics platform.
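The three steps can be sketched as a small in-process pipeline. This is a minimal illustration rather than a real CDC tool. It assumes raw changes arrive as Python dicts, and `ChangeEvent`, `capture`, `transform`, `deliver`, and the `password_hash` column are all hypothetical. A plain list stands in for the target system.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List, Optional


@dataclass
class ChangeEvent:
    op: str                      # "INSERT", "UPDATE", or "DELETE"
    table: str
    row: dict = field(default_factory=dict)


def capture(raw_changes: Iterable[dict]) -> Iterator[ChangeEvent]:
    """Capture: wrap each raw change record from the source as an event."""
    for raw in raw_changes:
        yield ChangeEvent(op=raw["op"], table=raw["table"], row=raw["row"])


def transform(event: ChangeEvent) -> Optional[ChangeEvent]:
    """Transform (optional): filter a noisy table and drop a sensitive column."""
    if event.table == "audit_log":
        return None
    row = {k: v for k, v in event.row.items() if k != "password_hash"}
    return ChangeEvent(op=event.op, table=event.table, row=row)


def deliver(events: Iterable[ChangeEvent], target: List[ChangeEvent]) -> None:
    """Deliver: hand every surviving event to the target system."""
    for event in events:
        target.append(event)


def run_pipeline(raw_changes: Iterable[dict], target: List[ChangeEvent]) -> None:
    transformed = (transform(event) for event in capture(raw_changes))
    deliver((event for event in transformed if event is not None), target)


sink: List[ChangeEvent] = []
run_pipeline(
    [
        {"op": "INSERT", "table": "users", "row": {"id": 1, "password_hash": "x"}},
        {"op": "INSERT", "table": "audit_log", "row": {"id": 7}},
    ],
    sink,
)
# sink now holds a single users event whose row is {"id": 1}
```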

CDC often operates by:

Listening to Logs: Tapping into transaction logs (e.g., MySQL binlog, PostgreSQL WAL).

Polling Tables: Periodically querying database tables to detect changes (less efficient).

Triggers: Using database triggers to capture changes (adds overhead).

Uses of CDC

CDC is a foundational technology for modern systems where real-time or near-real-time data replication and synchronization are critical. Here are the primary use cases:

1. Data Replication

Use Case: Synchronizing a primary database with a replica (or replicas) for high availability, failover, or disaster recovery.

Example: Keeping a read replica in sync with the main database.

2. Real-Time Analytics

Use Case: Sending real-time data to a data warehouse, analytics system, or stream-processing application for business intelligence or monitoring.

Example: Analyzing customer behavior in near real-time by feeding changes to a stream processor like Apache Flink or a data warehouse like Snowflake.

3. Event-Driven Architectures

Use Case: Capturing database changes as events and propagating them via messaging systems like Apache Kafka for downstream processing.

Example: Triggering a workflow when a new order is placed or a user updates their profile.

4. Cache Invalidation

Use Case: Keeping distributed caches (e.g., Redis, Memcached) synchronized with the source database.

Example: Invalidating or updating cached entries when the corresponding data in the database changes.

5. Data Migration

Use Case: Migrating data from one database to another with minimal downtime by keeping the target database updated with the source's ongoing changes.

Example: Moving from on-premises databases to cloud-managed databases (e.g., MySQL to Amazon Aurora).

6. Microservices Data Sharing

Use Case: Sharing state changes between services in a microservices architecture.

Example: A customer microservice updates a user's email address, and CDC propagates the change to a notification service.

7. Building Audit Trails

Use Case: Keeping a historical log of all changes made to a database for compliance, debugging, or audit purposes.

Example: Tracking every update to customer account details.

8. Data Lake Updates

Use Case: Updating a data lake with incremental changes instead of performing bulk imports.

Example: Keeping an S3-based data lake synchronized with transactional updates from a relational database.

9. Backup and Restore

Use Case: Capturing ongoing database changes to keep a backup system in sync with minimal latency.

Example: Creating consistent backups without locking tables for long periods.

10. Master-Data Management

Use Case: Synchronizing changes to a master record across multiple systems.

Example: Ensuring a central "Customer Record" is consistent across CRM, billing, and support platforms.

Key Benefits of CDC

Low Latency: Enables near real-time data updates across systems.

Event-Driven: Transforms database changes into events for stream processing.

Minimized Impact: With log-based capture, reading the transaction log places less load on the source database than repeatedly querying tables.

Scalable and Reliable: Facilitates horizontal scaling and decoupling of systems.

Limitations of CDC

Log Parsing Complexity: Log-based CDC depends on access to transaction logs, which not every database exposes, and each database's log format must be parsed differently.

Performance Overhead: Improper configurations or excessive data change rates may impact database performance.

Schema Evolution Challenges: Changes to the source database schema need to be handled gracefully downstream.

Event Ordering: Ensuring the correct order of events, especially in distributed systems, can be complex.

Common CDC Tools

Debezium: Open-source CDC tool built on Kafka Connect, supporting databases like MySQL, PostgreSQL, MongoDB, and Oracle.

AWS DMS (Database Migration Service): Helps migrate databases with built-in CDC capabilities.

Oracle GoldenGate: A proprietary tool for Oracle database CDC and replication.

Kafka Connect: A framework for streaming data into and out of Kafka through connectors; CDC connectors such as Debezium's run on it.

StreamSets: Supports CDC pipelines for real-time data processing.

CDC in Real-World Systems

E-Commerce: Real-time stock updates or order status changes reflected across inventory, analytics, and user-facing apps.

Streaming Platforms: Synchronizing metadata and comments in real time for video or media applications.

Banking: Reflecting account balance changes across multiple services in near real time.

Social Media: Propagating user activity (e.g., likes, shares, comments) for analytics and feeds.

CDC is a critical enabler for real-time data-driven systems, empowering modern architectures like microservices, event-driven applications, and distributed analytics.

2. How CDC Works

CDC can be implemented using various methods:

2.1. Log-Based CDC

Mechanism: Reads database transaction logs (e.g., MySQL binlog, PostgreSQL WAL, or Oracle redo logs).

Advantages:

High performance: No need for frequent table scans.

Low latency: Changes are captured as they happen.

Reliable: Captures every change in order and ensures transactional consistency.

Disadvantages:

Requires access to database logs (which might need administrative privileges).

Handling schema changes can be complex.
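A minimal sketch of the log-based idea, assuming an in-memory list stands in for the transaction log and each entry carries an increasing position (playing the role of a PostgreSQL LSN or a MySQL binlog offset). `LogReader` and `apply` are hypothetical names. Because the reader stores the last position it processed, a restarted reader resumes where the previous one stopped instead of rescanning tables.

```python
from typing import Dict, List, Optional, Tuple

# Each log entry: (position, operation, primary key, row image or None for deletes)
LogEntry = Tuple[int, str, int, Optional[dict]]


class LogReader:
    """Tails an append-only change log starting after a saved position."""

    def __init__(self, log: List[LogEntry], start_position: int = 0) -> None:
        self.log = log
        self.position = start_position  # last position already processed

    def poll(self) -> List[LogEntry]:
        """Return entries written after the saved position, in log order."""
        new_entries = [entry for entry in self.log if entry[0] > self.position]
        if new_entries:
            self.position = new_entries[-1][0]
        return new_entries


def apply(replica: Dict[int, dict], entries: List[LogEntry]) -> None:
    """Replay log entries onto a key/value replica, preserving their order."""
    for _, op, key, row in entries:
        if op == "DELETE":
            replica.pop(key, None)
        else:  # INSERT and UPDATE carry the full new row image
            replica[key] = row


log: List[LogEntry] = [
    (1, "INSERT", 10, {"name": "Ada"}),
    (2, "UPDATE", 10, {"name": "Ada L."}),
]
replica: Dict[int, dict] = {}
reader = LogReader(log)
apply(replica, reader.poll())

log.append((3, "DELETE", 10, None))
# A new reader resuming from the saved position sees only the delete.
resumed = LogReader(log, start_position=reader.position)
apply(replica, resumed.poll())
```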

2.2. Query-Based CDC

Mechanism: Periodically queries the database tables to identify changes (e.g., comparing timestamps or incremental IDs).

Advantages:

Simpler setup: Works without access to transaction logs.

Database-agnostic: Works on databases without log-based CDC support.

Disadvantages:

Inefficient: High overhead on the database, especially for large tables.

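Limited granularity: May miss intermediate updates that happen between polling intervals, and cannot see hard deletes because the deleted row is no longer there to query.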
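Query-based polling can be sketched with Python's built-in `sqlite3` module. The `products` table and its `updated_at` column are hypothetical, and `updated_at` is a counter the application must bump on every write (wall-clock timestamps work the same way but would make the example nondeterministic). The example also reproduces both granularity problems: two updates between polls collapse into one observed change, and a hard delete is never seen.

```python
import sqlite3
from typing import List, Tuple

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL, updated_at INTEGER)"
)


def poll_changes(conn: sqlite3.Connection, watermark: int) -> Tuple[List[tuple], int]:
    """Return rows modified after `watermark` and the new watermark."""
    rows = conn.execute(
        "SELECT id, price, updated_at FROM products "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


conn.execute("INSERT INTO products VALUES (1, 9.99, 1)")
conn.execute("INSERT INTO products VALUES (2, 4.50, 2)")
first, watermark = poll_changes(conn, 0)  # sees both inserts

conn.execute("UPDATE products SET price = 8.99, updated_at = 3 WHERE id = 1")
conn.execute("UPDATE products SET price = 7.99, updated_at = 4 WHERE id = 1")
second, watermark = poll_changes(conn, watermark)  # only the final price, 7.99

conn.execute("DELETE FROM products WHERE id = 2")
third, watermark = poll_changes(conn, watermark)  # empty: the delete is invisible
```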

2.3. Trigger-Based CDC

Mechanism: Uses database triggers to record changes in a separate table or directly push changes to downstream systems.

Advantages:

Precise: Captures all changes immediately.

Supports downstream delivery: Changes can be pushed directly to messaging systems or applications.

Disadvantages:

High overhead: Triggers can slow down database operations.

Complex management: Changes in triggers need careful handling during schema evolution.
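A trigger-based sketch, again using Python's `sqlite3` module with hypothetical `customers` and `customers_changes` tables. The triggers run inside the same transaction as each write, which is the source of both the precision and the overhead; a separate reader then consumes the change table in `change_id` order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

    -- Change table populated by triggers; change_id preserves write order.
    CREATE TABLE customers_changes (
        change_id INTEGER PRIMARY KEY AUTOINCREMENT,
        op TEXT NOT NULL,
        customer_id INTEGER NOT NULL,
        old_email TEXT,
        new_email TEXT
    );

    CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
        INSERT INTO customers_changes (op, customer_id, new_email)
        VALUES ('INSERT', NEW.id, NEW.email);
    END;

    CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
        INSERT INTO customers_changes (op, customer_id, old_email, new_email)
        VALUES ('UPDATE', NEW.id, OLD.email, NEW.email);
    END;

    CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
        INSERT INTO customers_changes (op, customer_id, old_email)
        VALUES ('DELETE', OLD.id, OLD.email);
    END;
    """
)

conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

# A downstream reader consumes the change table in write order.
changes = conn.execute(
    "SELECT op, customer_id, old_email, new_email "
    "FROM customers_changes ORDER BY change_id"
).fetchall()
```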

2.4. Application-Based CDC

Mechanism: Change detection is built into the application logic.

Advantages:

Highly customizable: Tailored to application needs.

Disadvantages:

Developer effort: Requires changes to the application code.

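Application-coupled: Only writes that go through the instrumented code path are captured; changes made directly in the database or by other services are missed.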
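Application-based capture can be sketched as a data-access layer that emits an event whenever it writes. `CustomerRepository` is hypothetical, and a dict stands in for the database. The final write illustrates the coupling problem: it skips the repository and produces no event. In a real system, writing to the database and publishing the event are also two separate operations that can fail independently; the transactional outbox pattern is one common way to address that.

```python
from typing import Callable, Dict, List, Optional

ChangeListener = Callable[[dict], None]


class CustomerRepository:
    """Hypothetical data-access layer that emits a change event for each write it performs."""

    def __init__(self) -> None:
        self.rows: Dict[int, dict] = {}  # stands in for the real database
        self.listeners: List[ChangeListener] = []

    def subscribe(self, listener: ChangeListener) -> None:
        self.listeners.append(listener)

    def _emit(self, op: str, key: int, before: Optional[dict], after: Optional[dict]) -> None:
        event = {"op": op, "key": key, "before": before, "after": after}
        for listener in self.listeners:
            listener(event)

    def save(self, key: int, row: dict) -> None:
        before = self.rows.get(key)
        self.rows[key] = dict(row)
        self._emit("UPDATE" if before is not None else "INSERT", key, before, dict(row))

    def delete(self, key: int) -> None:
        before = self.rows.pop(key, None)
        if before is not None:
            self._emit("DELETE", key, before, None)


received: List[dict] = []
repo = CustomerRepository()
repo.subscribe(received.append)

repo.save(1, {"email": "a@example.com"})
repo.save(1, {"email": "b@example.com"})
# A write that bypasses the repository is invisible to listeners.
repo.rows[2] = {"email": "c@example.com"}
```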

3. Key Architectural Components

A typical CDC pipeline includes the following stages:

3.1. Change Detection

Monitors and captures changes at the source database.

Examples:

Log-based detection (e.g., Debezium).

Trigger-based detection.

3.2. Event Transformation

Transforms raw database changes into structured events.

Examples:

Mapping database operations (INSERT, UPDATE, DELETE) into events.

Adding metadata like timestamps, table names, or operation type.
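The mapping step might look like the following sketch. The field names (`op`, `before`, `after`, `source`, `ts_ms`) and the single-letter operation codes are modeled loosely on Debezium's change-event envelope, which carries considerably more source metadata; `to_change_event` itself is hypothetical.

```python
import time
from typing import Optional

# Single-letter operation codes, modeled loosely on Debezium's envelope.
OP_CODES = {"INSERT": "c", "UPDATE": "u", "DELETE": "d"}


def to_change_event(
    table: str,
    operation: str,
    before: Optional[dict],
    after: Optional[dict],
    ts_ms: Optional[int] = None,
) -> dict:
    """Map a raw row change to a structured event with metadata."""
    if operation not in OP_CODES:
        raise ValueError(f"unsupported operation: {operation}")
    return {
        "op": OP_CODES[operation],
        "before": before,  # row image before the change (None for inserts)
        "after": after,    # row image after the change (None for deletes)
        "source": {"table": table},
        "ts_ms": ts_ms if ts_ms is not None else int(time.time() * 1000),
    }


event = to_change_event(
    "orders",
    "UPDATE",
    {"id": 42, "status": "NEW"},
    {"id": 42, "status": "PAID"},
    ts_ms=1_700_000_000_000,
)
```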

3.3. Event Propagation

Sends the transformed events to downstream systems.

Examples:

Event-streaming platforms like Apache Kafka or Apache Pulsar, and stream processors like Apache Flink.

Data warehouses like Snowflake or Google BigQuery.

3.4. Downstream Processing

Consumes CDC events for various purposes:

Data replication.

Real-time analytics.

Triggering workflows.

4. CDC with Debezium

Debezium is a widely used CDC tool built on Kafka Connect. It supports log-based CDC for databases like MySQL, PostgreSQL, MongoDB, and others.

Features of Debezium:

Ordering: Emits change events in the order they were committed to the transaction log; events for the same row share a Kafka message key, so they stay ordered within a partition.

Scalability: Can handle high-volume databases with minimal performance overhead.

Schema Evolution: Supports schema changes like adding columns.

How Debezium Works:

Connector: Connects to the database and monitors its transaction log.

Kafka Integration: Streams CDC events to Kafka topics for consumption.

Consumers: Downstream services consume these events for replication, analytics, or other use cases.
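On the consumer side, a service deserializes each record value and applies it. This sketch assumes Debezium's JSON converter, where the envelope is wrapped in a `payload` field when schemas are embedded and sent bare otherwise, and assumes each table has an `id` primary key. Reading records from Kafka (via a Kafka client library) is omitted; plain strings stand in for record values, and `None` stands in for a tombstone record. `apply_change` is a hypothetical name.

```python
import json
from typing import Dict, List, Optional


def apply_change(replica: Dict[int, dict], message: Optional[str]) -> None:
    """Apply one Debezium-style JSON change event to an in-memory replica keyed by id."""
    if message is None:
        return  # tombstone record (null value) that may follow a delete
    document = json.loads(message)
    payload = document.get("payload", document)  # with or without embedded schema
    op = payload["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the new image
        row = payload["after"]
        replica[row["id"]] = row
    elif op == "d":  # delete: remove using the key from the old image
        replica.pop(payload["before"]["id"], None)
    else:
        raise ValueError(f"unknown op: {op}")


messages: List[Optional[str]] = [
    '{"payload": {"op": "c", "before": null, "after": {"id": 1, "qty": 5}}}',
    '{"payload": {"op": "u", "before": {"id": 1, "qty": 5}, "after": {"id": 1, "qty": 3}}}',
    '{"payload": {"op": "d", "before": {"id": 1, "qty": 3}, "after": null}}',
    None,
]
replica: Dict[int, dict] = {}
for message in messages[:2]:
    apply_change(replica, message)
after_update = dict(replica)
for message in messages[2:]:
    apply_change(replica, message)
```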

5. Use Cases in Detail

5.1. Real-Time Analytics

Scenario: An e-commerce company wants to track real-time sales trends.

Solution: Use CDC to stream order data from a transactional database to a stream processor like Apache Flink or a data warehouse like Snowflake.

Benefits: Enables dashboards to display up-to-the-minute sales trends.
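The aggregation behind such a dashboard can be sketched as one-minute tumbling windows over order-creation events. In practice a stream processor like Apache Flink would manage the windows and state; this plain-Python stand-in assumes a hypothetical event shape with `op`, `ts_ms`, and an `after.total` field, and counts only newly created orders (`op == "c"`).

```python
from collections import defaultdict
from typing import Dict, Iterable


def sales_per_minute(events: Iterable[dict]) -> Dict[int, float]:
    """Sum order totals into one-minute tumbling windows keyed by window start (ms)."""
    totals: Dict[int, float] = defaultdict(float)
    for event in events:
        if event["op"] != "c":  # count newly created orders only
            continue
        window_start = event["ts_ms"] - event["ts_ms"] % 60_000
        totals[window_start] += event["after"]["total"]
    return dict(totals)


events = [
    {"op": "c", "ts_ms": 0, "after": {"id": 1, "total": 20.0}},
    {"op": "c", "ts_ms": 30_000, "after": {"id": 2, "total": 5.0}},
    {"op": "u", "ts_ms": 45_000, "after": {"id": 2, "total": 5.0}},  # ignored
    {"op": "c", "ts_ms": 61_000, "after": {"id": 3, "total": 12.5}},
]
result = sales_per_minute(events)
```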

5.2. Microservices Synchronization

Scenario: A customer microservice updates a user’s address, and this change needs to propagate to billing and shipping microservices.

Solution: CDC captures the change in the customer database and sends an event to Kafka, which other microservices consume.

Benefits: Ensures eventual consistency across microservices.
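A sketch of the fan-out, assuming a hypothetical in-memory `Topic` class stands in for a Kafka topic and each service keeps its own local copy of addresses. Delivery here is synchronous for brevity; with Kafka each service consumes at its own pace, which is why the services converge eventually rather than immediately.

```python
from typing import Callable, Dict, List


class Topic:
    """In-memory stand-in for a topic with several independent subscribers."""

    def __init__(self) -> None:
        self.subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self.subscribers.append(handler)

    def publish(self, event: dict) -> None:
        for handler in self.subscribers:
            handler(event)


class AddressProjection:
    """Local copy of customer addresses kept by one downstream service."""

    def __init__(self) -> None:
        self.addresses: Dict[int, str] = {}

    def on_customer_change(self, event: dict) -> None:
        if event["op"] == "d":
            self.addresses.pop(event["before"]["id"], None)
        else:
            row = event["after"]
            self.addresses[row["id"]] = row["address"]


customer_changes = Topic()
billing, shipping = AddressProjection(), AddressProjection()
customer_changes.subscribe(billing.on_customer_change)
customer_changes.subscribe(shipping.on_customer_change)

customer_changes.publish({
    "op": "u",
    "before": {"id": 7, "address": "1 Old Rd"},
    "after": {"id": 7, "address": "2 New St"},
})
```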

5.3. Cache Invalidation

Scenario: A web application caches product details in Redis, but the database is updated with new product prices.

Solution: CDC captures the database update and sends an event to invalidate the relevant cache entry.

Benefits: Keeps cache data fresh without complex custom logic.
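A sketch of CDC-driven invalidation, assuming a hypothetical dict-backed `ProductCache` stands in for Redis and that rows are keyed by an `id` column. The handler deletes the entry instead of writing the new value, so the next read reloads the committed row from the database and the cache only ever holds values that came from a fresh read.

```python
from typing import Callable, Dict, Optional


class ProductCache:
    """Read-through cache (a stand-in for Redis) invalidated by change events."""

    def __init__(self, load_from_db: Callable[[int], Optional[dict]]) -> None:
        self.load_from_db = load_from_db
        self.entries: Dict[int, dict] = {}
        self.misses = 0

    def get(self, product_id: int) -> Optional[dict]:
        if product_id not in self.entries:
            self.misses += 1
            row = self.load_from_db(product_id)
            if row is None:
                return None
            self.entries[product_id] = row
        return self.entries[product_id]

    def on_change(self, event: dict) -> None:
        # Invalidate rather than update; deletes only carry a "before" image.
        row = event["after"] or event["before"]
        self.entries.pop(row["id"], None)


db = {1: {"id": 1, "price": 10.0}}
cache = ProductCache(db.get)
cache.get(1)                      # miss: loads price 10.0
db[1] = {"id": 1, "price": 12.0}  # price changes in the database
cache.on_change({
    "op": "u",
    "before": {"id": 1, "price": 10.0},
    "after": {"id": 1, "price": 12.0},
})
price = cache.get(1)["price"]     # miss again: reloads 12.0
```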

5.4. Data Migration

Scenario: Migrating data from an on-premises database to a cloud database.

Solution: CDC keeps the target database updated with incremental changes while the migration is in progress.

Benefits: Minimal downtime during migration.
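The usual shape of such a migration is a consistent snapshot taken at a known log position, followed by replay of every change committed after that position until the target has caught up and traffic can be cut over; tools such as AWS DMS automate this full-load-then-replicate flow. A minimal sketch with a hypothetical `migrate` function, where dicts stand in for the source snapshot and the target database:

```python
from typing import Dict, List, Optional, Tuple

# (log position, key, new row or None for a delete)
Change = Tuple[int, str, Optional[dict]]


def migrate(
    snapshot: Dict[str, dict], snapshot_position: int, change_log: List[Change]
) -> Dict[str, dict]:
    """Bulk-load a consistent snapshot, then replay changes committed after it."""
    target = {key: dict(row) for key, row in snapshot.items()}  # initial bulk copy
    for position, key, row in change_log:
        if position <= snapshot_position:
            continue  # already reflected in the snapshot
        if row is None:
            target.pop(key, None)
        else:
            target[key] = dict(row)
    return target


# Snapshot taken at log position 2; the source kept accepting writes afterwards.
snapshot = {"a": {"v": 1}, "b": {"v": 2}}
change_log: List[Change] = [
    (1, "a", {"v": 1}),
    (2, "b", {"v": 2}),
    (3, "a", {"v": 10}),  # after the snapshot: update
    (4, "b", None),       # after the snapshot: delete
    (5, "c", {"v": 3}),   # after the snapshot: insert
]
target = migrate(snapshot, 2, change_log)
```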

6. Challenges in CDC

6.1. Handling High Data Volume

Problem: Large-scale systems generate high volumes of database changes.

Solution:

Use partitioning strategies (e.g., topic partitioning in Kafka).

Filter unnecessary data at the CDC source.
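Both mitigations can be sketched together: events from tables outside a hypothetical include list are dropped before they are published, and the rest are routed to a partition by hashing the row key, so all changes to one row land on the same partition. `zlib.crc32` is only a stand-in here; Kafka's default partitioner uses a murmur2 hash.

```python
import zlib
from typing import Dict, List, Optional, Set

NUM_PARTITIONS = 4
CAPTURED_TABLES: Set[str] = {"orders", "customers"}  # hypothetical include list


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Stable hash so every change to the same key goes to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(event: dict) -> Optional[int]:
    """Drop events from uncaptured tables; otherwise choose a partition."""
    if event["table"] not in CAPTURED_TABLES:
        return None
    return partition_for(str(event["key"]))


partitions: Dict[int, List[dict]] = {p: [] for p in range(NUM_PARTITIONS)}
events = [
    {"table": "orders", "key": 101, "op": "c"},
    {"table": "sessions", "key": 5, "op": "u"},  # filtered out at the source
    {"table": "orders", "key": 101, "op": "u"},
]
for event in events:
    partition = route(event)
    if partition is not None:
        partitions[partition].append(event)
```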

6.2. Ensuring Event Ordering

Problem: Distributed systems can cause events to arrive out of order.

Solution:

Use log positions (e.g., PostgreSQL LSNs or MySQL binlog coordinates) or transaction IDs to reconstruct order.

Leverage tools like Kafka, which provide ordering guarantees within partitions.
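When events can arrive out of order, a consumer can buffer them and release them strictly by sequence number. This sketch assumes a hypothetical gap-free sequence number on every event; real log positions such as LSNs increase but are not contiguous, so they support discarding stale events rather than detecting gaps. `ReorderBuffer` is a hypothetical name.

```python
import heapq
from typing import List, Tuple


class ReorderBuffer:
    """Release events strictly in sequence order, holding back early arrivals."""

    def __init__(self, next_seq: int = 1) -> None:
        self.next_seq = next_seq
        self.pending: List[Tuple[int, str]] = []  # min-heap of (seq, payload)

    def push(self, seq: int, payload: str) -> List[str]:
        # Ignore duplicates and events that were already released.
        if seq < self.next_seq or any(s == seq for s, _ in self.pending):
            return []
        heapq.heappush(self.pending, (seq, payload))
        released: List[str] = []
        while self.pending and self.pending[0][0] == self.next_seq:
            _, ready = heapq.heappop(self.pending)
            released.append(ready)
            self.next_seq += 1
        return released


buffer = ReorderBuffer()
output: List[str] = []
for seq in [3, 1, 2, 5, 4]:
    output.extend(buffer.push(seq, f"event-{seq}"))
```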

6.3. Schema Evolution

Problem: Database schema changes (e.g., adding/dropping columns) can break downstream systems.

Solution:

CDC tools like Debezium automatically detect schema changes.

Downstream systems must be designed to handle schema evolution gracefully.
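One common way to make consumers resilient is the tolerant-reader approach: read only the fields the consumer knows about, fill in defaults for fields an older event lacks, and ignore fields it does not recognize yet. The customer fields and `read_customer` below are hypothetical.

```python
from typing import Any, Dict

# Fields this consumer understands, with defaults for ones added in later versions.
FIELD_DEFAULTS: Dict[str, Any] = {"id": None, "email": None, "phone": None}


def read_customer(after: Dict[str, Any]) -> Dict[str, Any]:
    """Tolerant reader: default missing known fields, drop unknown ones."""
    return {name: after.get(name, default) for name, default in FIELD_DEFAULTS.items()}


# Event produced before the "phone" column existed.
old = read_customer({"id": 1, "email": "a@example.com"})
# Event produced after a "tier" column was added that this consumer does not know yet.
new = read_customer({"id": 2, "email": "b@example.com", "phone": "555", "tier": "gold"})
```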

6.4. Latency

Problem: Delays in propagating changes can impact real-time use cases.

Solution:

Optimize CDC pipelines for low latency.

Monitor pipeline health and address bottlenecks.

7. CDC in Modern System Design

CDC is a backbone for systems requiring data synchronization and event-driven architectures. Here's where it fits:

7.1. Event-Driven Architectures

CDC turns database changes into events, decoupling services in microservices architectures.

7.2. Data Lakes

CDC enables near-real-time ingestion into data lakes built on Amazon S3 or Azure Data Lake Storage for machine learning or analytics.

7.3. Multi-Region Systems

Synchronizes data across geographically distributed systems with low latency.

Conclusion

CDC is a cornerstone technology for modern distributed systems, enabling real-time data pipelines, event-driven systems, and scalable architectures. Tools like Debezium, Kafka, and AWS DMS have made CDC more accessible and efficient. When designing a system with CDC, consider factors like performance, schema evolution, event ordering, and scalability to ensure robustness and reliability.

Source: Wikipedia